Scientists building brain-like machine learning systems face a set of tradeoffs between speed, power consumption, and the ability to learn and perform tasks accurately. Traditional artificial neural networks have shown promise in learning language and vision tasks, but training them is slow and energy-intensive. Researchers at the University of Pennsylvania have addressed these limitations by building an analog system that is fast, low-power, scalable, and capable of learning complex tasks.

The newly developed contrastive local learning network is an advance in analog machine learning. Compared with traditional electrical networks, the system is designed to be more scalable and efficient, and it can learn nonlinear tasks such as “exclusive or” (XOR) relationships and nonlinear regression. Its key feature is that the components evolve according to local rules, without requiring knowledge of the overall network structure. This mirrors the way neurons in the human brain operate, where learning emerges without centralized control.
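To see why XOR counts as a nonlinear task, the short Python sketch below fits the best possible linear model to the XOR truth table. It is an illustration only and not part of the researchers' system; the variable names are chosen here for clarity.

```python
import numpy as np

# XOR truth table: two binary inputs plus a constant bias column.
X = np.array([[0, 0, 1],
              [0, 1, 1],
              [1, 0, 1],
              [1, 1, 1]], dtype=float)
y = np.array([0.0, 1.0, 1.0, 0.0])

# Least-squares fit of a purely linear model y ~ w1*x1 + w2*x2 + b.
weights, *_ = np.linalg.lstsq(X, y, rcond=None)
predictions = X @ weights

print("weights:", np.round(weights, 3))
print("predictions:", np.round(predictions, 3))
```

The best linear fit predicts the same value for all four input pairs, so it cannot separate the two XOR classes. A system that learns XOR must therefore contain some nonlinearity.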

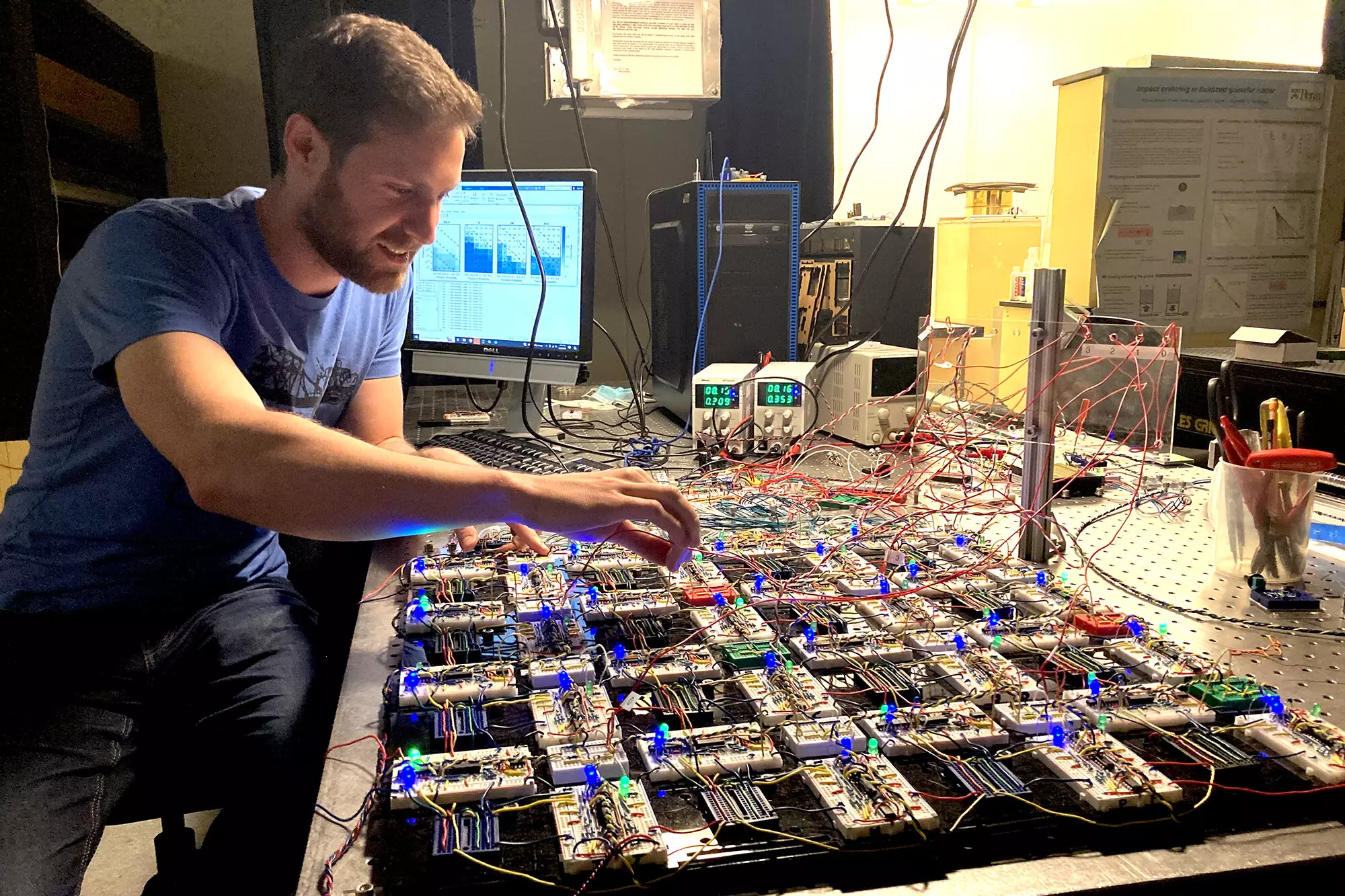

According to physicist Sam Dillavou, a postdoc in the research group, the contrastive local learning network can perform useful tasks similar to computational neural networks, but with the added advantage of being a physical object. The system’s inherent tolerance to errors and its robustness across different implementations make it a good model for studying a wide range of problems, including biological systems. It could also have practical applications in interfacing with devices such as cameras and microphones, where it would enable efficient data processing.

The research behind the contrastive local learning network is built upon the Coupled Learning framework developed by physicists Andrea J. Liu and Menachem Stern. This framework allows physical systems to learn tasks based on applied inputs, using local learning rules and without the need for a centralized processor. By applying this paradigm to analog machine learning, the researchers have created a system that adapts to external stimuli and learns autonomously.
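The idea of a local learning rule can be sketched in a few lines of Python. The example below is a minimal simulation, assuming an idealized linear resistor network and the coupled learning update as it is usually stated in the literature: each edge compares its voltage drop in a "free" state (inputs applied) with a "clamped" state (output nudged slightly toward the target) and adjusts its own conductance from that difference alone. The network size, the parameters `eta` and `alpha`, and the function names are assumptions made for illustration, and the code does not model the actual hardware.

```python
from itertools import combinations

import numpy as np


def solve_voltages(n_nodes, edges, k, fixed):
    """Solve Kirchhoff's current law for all node voltages.

    `fixed` maps node index -> imposed voltage; all other nodes float.
    """
    laplacian = np.zeros((n_nodes, n_nodes))
    for (i, j), kij in zip(edges, k):
        laplacian[i, i] += kij
        laplacian[j, j] += kij
        laplacian[i, j] -= kij
        laplacian[j, i] -= kij
    fixed_idx = list(fixed)
    free_idx = [n for n in range(n_nodes) if n not in fixed]
    v = np.zeros(n_nodes)
    v[fixed_idx] = [fixed[n] for n in fixed_idx]
    a = laplacian[np.ix_(free_idx, free_idx)]
    b = -laplacian[np.ix_(free_idx, fixed_idx)] @ v[fixed_idx]
    v[free_idx] = np.linalg.solve(a, b)
    return v


def train_coupled(n_nodes, edges, inputs, out_node, target,
                  eta=0.1, alpha=0.5, steps=300):
    """Train edge conductances with a local contrastive update (sketch)."""
    k = np.ones(len(edges))
    src = np.array([i for i, _ in edges])
    dst = np.array([j for _, j in edges])
    for _ in range(steps):
        # Free state: only the inputs are imposed.
        v_free = solve_voltages(n_nodes, edges, k, inputs)
        # Clamped state: output nudged a fraction eta toward the target.
        nudged = v_free[out_node] + eta * (target - v_free[out_node])
        v_clamped = solve_voltages(n_nodes, edges, k,
                                   {**inputs, out_node: nudged})
        # Each edge uses only its own voltage drops in the two states.
        dv_free = v_free[src] - v_free[dst]
        dv_clamped = v_clamped[src] - v_clamped[dst]
        k += alpha / (2 * eta) * (dv_free**2 - dv_clamped**2)
        k = np.clip(k, 1e-3, None)  # conductances must stay positive
    return k


# Hypothetical task: 5 fully connected nodes, node 0 held at 0 V,
# node 1 held at 1 V, and node 2 should settle at 0.3 V.
edges = list(combinations(range(5), 2))
inputs = {0: 0.0, 1: 1.0}
k = train_coupled(5, edges, inputs, out_node=2, target=0.3)
v_out = solve_voltages(5, edges, k, inputs)[2]
print(f"learned output voltage: {v_out:.3f}")
```

The linear solver here only stands in for the physics: in a real circuit the voltages settle on their own, so no central processor has to compute them, and each element needs only the voltage drop across itself to update.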

The researchers are now focused on scaling up the design of the contrastive local learning network. Open questions under investigation include how long memory is stored, how noise affects learning, which network architectures work best, and how to optimize the nonlinearity. As engineering professor Marc Miskin points out, the scalability of learning systems remains a significant challenge, and it is uncertain how capabilities evolve as systems increase in size. Answering these questions could open the way to new advances in machine learning.

The contrastive local learning network marks a notable step forward for analog machine learning systems. By combining principles of physics and engineering, the researchers have created a system that is fast, low-power, and scalable, and that can learn complex tasks autonomously. Their continuing work on the system's scalability and applications may lead to further innovations in machine learning.
