In recent years, computer scientists have made significant advances in developing deep neural networks (DNNs) for a wide range of real-world tasks. However, studies have shown that these models can behave unfairly because of biases in the data they were trained on and in the hardware platforms they are deployed on.

The Role of Hardware in AI Fairness

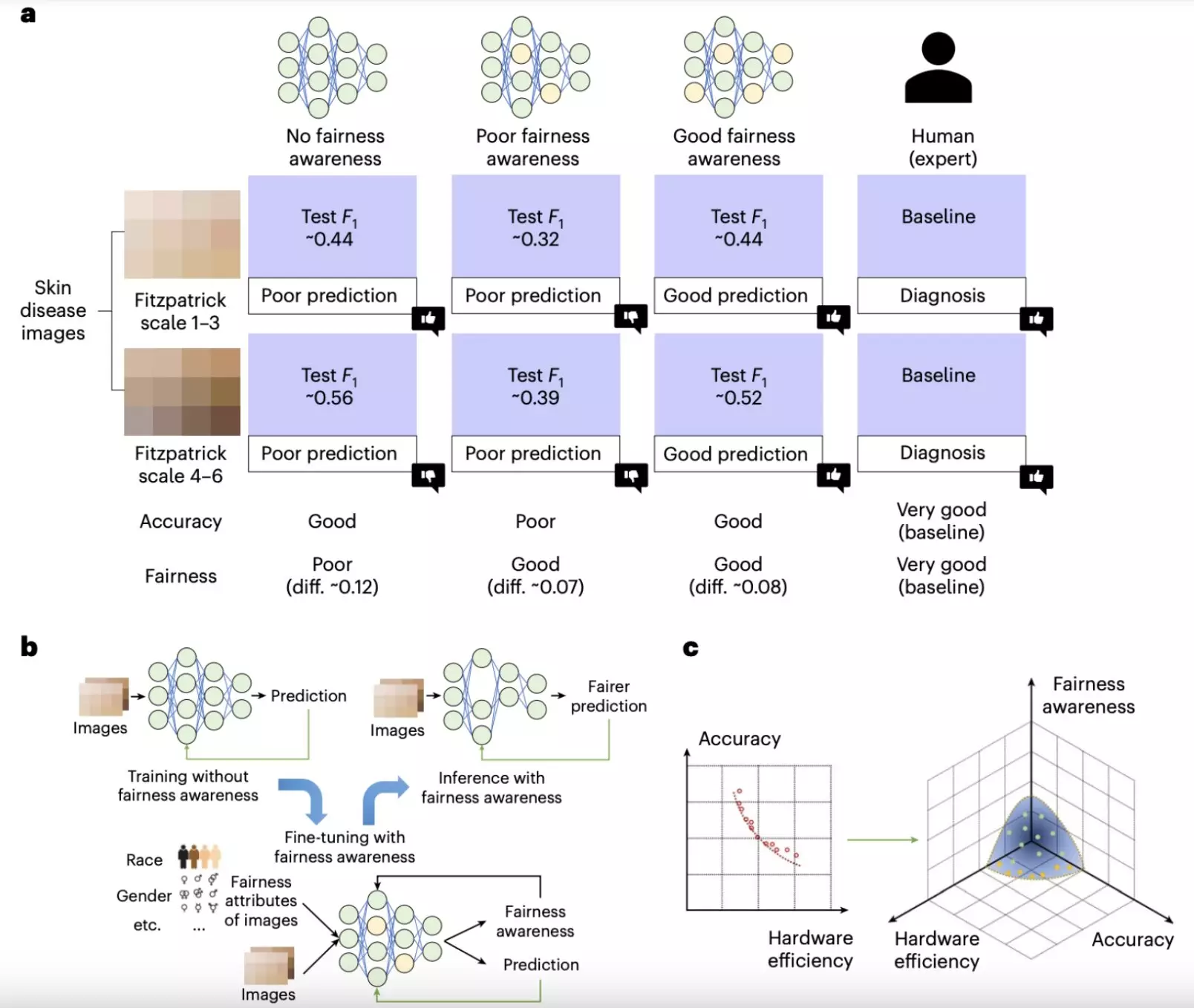

Researchers at the University of Notre Dame have highlighted the role that hardware systems play in the fairness of AI. Their recent study, published in Nature Electronics, examines how emerging hardware designs, such as computing-in-memory (CiM) devices, can affect the fairness of DNNs. The work responds to the urgent need for fair AI, particularly in high-stakes domains such as healthcare, where biased outputs can have serious consequences.

In one set of experiments, Shi and his colleagues explored how hardware-aware neural architecture designs of varying sizes and structures affect the fairness of a model's outputs. They found that larger, more complex neural networks, which typically require more hardware resources, tended to be fairer. Deploying these models on resource-constrained devices, however, is challenging. To address this, the researchers proposed strategies such as compressing larger models so that they retain their performance while reducing computational load.
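
The article does not say which compression method the researchers used. As one illustration of the general idea, here is a minimal sketch of magnitude pruning, a common compression technique that zeroes out the smallest weights so fewer values need to be stored and computed. The function name `magnitude_prune` and its `sparsity` parameter are hypothetical and not taken from the study.

```python
from typing import List, Sequence


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights.

    Hypothetical helper for illustration; not the study's compression method.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    # Number of weights to remove.
    k = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    print(magnitude_prune([0.1, -2.0, 0.5, -0.05], sparsity=0.5))
```

In practice, pruning is usually followed by fine-tuning to recover accuracy, and whether a pruned model stays as fair as the original is exactly the kind of question the study raises.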

Another set of experiments focused on non-idealities in hardware platforms, such as device variability and stuck-at faults in CiM architectures. The researchers examined how changes in hardware, such as memory capacity and processing power, influenced the fairness of AI models. They observed trade-offs between accuracy and fairness across different hardware setups, which points to the need for noise-aware training strategies that improve model robustness and fairness without significantly increasing computational demands.
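
The article does not describe how these non-idealities were modeled or how fairness was measured. As a rough sketch under simplified assumptions, the code below perturbs a list of weights with multiplicative Gaussian device variation and random stuck-at faults (a cell stuck at 0, or at a saturated value of magnitude `w_max`), and measures fairness as the largest accuracy difference between demographic groups, one common metric among several. The names `perturb_weights` and `accuracy_gap` and the parameters `sigma`, `fault_rate`, and `w_max` are hypothetical.

```python
import random
from typing import Dict, Hashable, List, Sequence


def perturb_weights(weights: Sequence[float], sigma: float, fault_rate: float,
                    w_max: float, rng: random.Random) -> List[float]:
    """Return a copy of `weights` with simplified, hypothetical CiM non-idealities.

    - Device variability: each healthy weight is scaled by (1 + N(0, sigma)).
    - Stuck-at faults: with probability `fault_rate`, a weight is replaced by
      0.0 (stuck-at-OFF) or by +/- w_max keeping its sign (stuck-at-ON),
      each with equal probability.
    """
    noisy: List[float] = []
    for w in weights:
        if rng.random() < fault_rate:
            if rng.random() < 0.5:
                noisy.append(0.0)
            else:
                noisy.append(w_max if w >= 0 else -w_max)
        else:
            noisy.append(w * (1.0 + rng.gauss(0.0, sigma)))
    return noisy


def accuracy_gap(preds: Sequence[int], labels: Sequence[int],
                 groups: Sequence[Hashable]) -> float:
    """Largest difference in accuracy between any two groups (assumed metric)."""
    correct: Dict[Hashable, int] = {}
    total: Dict[Hashable, int] = {}
    for p, y, g in zip(preds, labels, groups):
        total[g] = total.get(g, 0) + 1
        correct[g] = correct.get(g, 0) + int(p == y)
    accs = [correct[g] / total[g] for g in total]
    return max(accs) - min(accs)


if __name__ == "__main__":
    rng = random.Random(0)
    print(perturb_weights([0.3, -0.7, 1.2], sigma=0.1, fault_rate=0.2,
                          w_max=2.0, rng=rng))
    print(accuracy_gap([1, 1, 0, 0], [1, 1, 1, 1], ["a", "a", "b", "b"]))
```

In noise-aware training, a function like `perturb_weights` would be applied with fresh random draws on every forward pass during training, so the network learns weights that tolerate the variation; that training loop is not shown here.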

Implications for Future AI Development

The study's findings underline the crucial role hardware plays in determining the fairness of AI models. They suggest that larger, resource-intensive models generally achieve better fairness but require more capable hardware. The research team also stresses the importance of considering software algorithms and hardware platforms together when developing AI tools for sensitive applications. Such a holistic approach could lead to AI systems that are both accurate and equitable across diverse user demographics.

Future Research Directions

Moving forward, the researchers plan to study the intersection of hardware design and AI fairness in more depth. They aim to develop cross-layer co-design frameworks that optimize neural network architectures for fairness while respecting hardware constraints. They also intend to explore adaptive training techniques that keep AI models fair regardless of the devices they run on. Finally, the team plans to investigate how specific hardware configurations can be tuned to enhance fairness, potentially paving the way for new classes of devices designed with fairness as a primary objective.

The study by Shi and his colleagues sheds light on the critical relationship between hardware design and AI fairness. By accounting for the impact of hardware on both the performance and the fairness of AI models, researchers can work toward AI systems that are more equitable as well as accurate. This comprehensive approach to AI development has the potential to transform many industries and to help ensure that AI technologies benefit all individuals equally.