Artificial Intelligence (AI) has transformed many sectors, from healthcare to finance, largely because of its ability to analyze vast amounts of data. This progress has limits, however. At the heart of them lies the so-called von Neumann bottleneck, which arises because memory and processing units are separate: data must be shuttled between them, and the speed of that movement lags behind the speed of computation. As datasets grow, traditional hardware struggles to keep up, which hampers machine learning workloads, particularly those dominated by operations such as matrix-vector multiplication (MVM).

Recent research led by Professor Sun Zhong of Peking University addresses these challenges with a scheme called dual-in-memory computing (dual-IMC). The approach could change how machine learning hardware is designed and could also improve the energy efficiency of conventional data operations. Dual-IMC departs from the conventional single-in-memory computing (single-IMC) framework, whose efficiency is limited by data transport and power consumption.

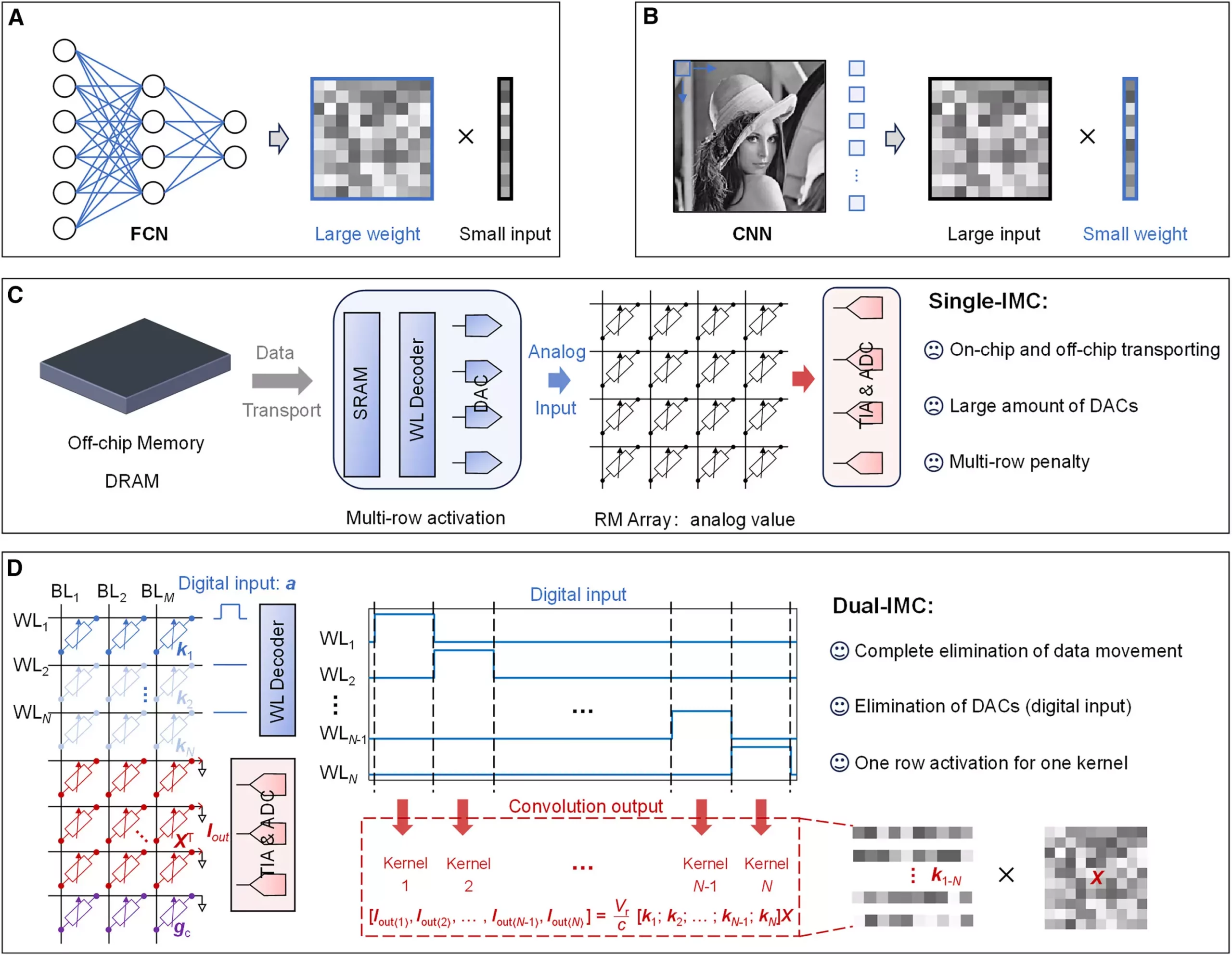

The single-IMC method typically stores neural network weights in memory chips but takes its input data from outside the chip, which often leads to delays and increased energy consumption when data is transferred across chips. The inputs also have to pass through digital-to-analog converters (DACs), which complicate the architecture and add to the power budget. In contrast, the dual-IMC framework stores both the neural network weights and the input data in the same memory array, so the computation takes place entirely in memory. This reduces the time lost to data movement and cuts power consumption.
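To make the single-IMC data path concrete, here is a minimal Python sketch of an idealized analog crossbar performing MVM. It is an illustration under stated assumptions, not the research team's circuit: the function names `quantize_dac` and `crossbar_mvm`, the 4-bit DAC resolution, and the conductance values are all hypothetical. Weights are assumed to be stored as cell conductances, inputs arrive as digital values that a DAC converts to row voltages, and each column current is the sum of conductance times voltage. How the dual-IMC array computes with both operands stored in memory is not described in this article, so the sketch models only the single-IMC baseline.

```python
def quantize_dac(x, bits=4, v_max=1.0):
    """Model an ideal DAC (assumed resolution): clip x to [0, 1] and map it
    onto one of 2**bits evenly spaced voltage levels up to v_max."""
    levels = 2 ** bits - 1
    code = round(min(max(x, 0.0), 1.0) * levels)
    return v_max * code / levels


def crossbar_mvm(conductances, voltages):
    """Idealized crossbar: the current on column j is the sum over rows i of
    G[i][j] * V[i] (Ohm's law per cell, Kirchhoff's current law per column)."""
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per crossbar row")
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Hypothetical 3x2 weight array stored as conductances (arbitrary units).
G = [[1.0, 2.0],
     [3.0, 4.0],
     [5.0, 6.0]]

# External digital inputs: in single-IMC these must be moved onto the chip
# and converted by DACs before the array can compute anything.
x = [0.0, 1.0, 0.5]
v = [quantize_dac(xi) for xi in x]

currents = crossbar_mvm(G, v)
print(currents)
```

The multiply-accumulate itself happens in one step inside the array; the per-input `quantize_dac` call stands in for the off-chip transfer and conversion stage that dual-IMC is designed to remove.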

To validate dual-IMC in practice, the research team tested it on resistive random-access memory (RRAM) devices, focusing on tasks such as signal recovery and image processing. The premise behind dual-IMC is to exploit the inherent advantages of in-memory computation, which improves efficiency and reduces latency. By keeping all necessary data operations within a single memory architecture, the scheme eliminates the costly transfers that typically occur between different types of memory, such as static and dynamic random-access memory.

The implications of this research extend beyond raw efficiency. By reducing the number of components and eliminating the need for DACs, the dual-IMC scheme is projected to lower production costs and shrink the circuit footprint. Systems built on dual-IMC could therefore be more sustainable, requiring less energy and fewer material resources, which addresses both environmental concerns and economic feasibility.

As computing becomes increasingly data-driven, solutions like the dual-IMC model could pave the way for computing architectures that meet the demands of modern AI applications. The findings presented by Professor Sun Zhong and his team mark a step towards overcoming existing hardware barriers and optimizing how AI systems process data. If dual-IMC systems are widely adopted, researchers and engineers could gain new capabilities in machine learning and help data processing keep pace with a rapidly evolving digital landscape.

The dual-IMC framework does not merely address the von Neumann bottleneck; it also targets the broader computing challenges posed by increasingly sophisticated machine learning models. As demand for advanced data processing grows, the applications of such technologies could extend well beyond AI and support breakthroughs in other fields.
