Human emotions are complex and often difficult to decode. Because emotional experiences have many facets, identifying and interpreting feelings accurately is hard. Unlike the well-defined tasks that machines execute with precision, human emotions are shaped by many factors, including context, personal history, and sociocultural background. Recognizing and quantifying these emotional states is therefore a significant challenge, not only for human observers but also for artificial intelligence (AI) systems designed to emulate this understanding. Researchers are now exploring approaches that combine traditional psychological methods with technological advances to bridge this gap.

In recent years, interest has surged in using AI for emotion recognition, with the aim of building systems that perceive and interpret emotional cues effectively. Such systems have potential applications in fields including healthcare, education, and customer service. By integrating methods such as facial emotion recognition (FER), gesture recognition, and multi-modal emotional analysis, AI systems can improve their understanding of human emotions and supply data that informs user-centered designs and services.

The significance of these technologies lies in their ability to analyze emotional responses in real time. For instance, an emotion recognition system could assess a person's reactions during a medical evaluation or a lesson and tailor its responses accordingly. Such personalized interaction could improve the quality of care and enrich learning by making both more attuned to individuals' emotional states.

A hallmark of emotion recognition technology is its reliance on multiple input channels, which together build a more complete picture of a person's emotional state. One promising approach combines physiological measurements, such as electroencephalography (EEG) recordings of brain activity and heart-rate variability, with behavioral indicators such as eye movements. Translating subjective emotional experiences into data sets that AI systems can process makes it possible to capture nuanced distinctions in emotional arousal.
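
As one concrete illustration of turning a raw physiological signal into a number an AI model can use, the sketch below computes RMSSD (root mean square of successive differences), a common time-domain heart-rate-variability measure, from a list of RR intervals, that is, the times between successive heartbeats in milliseconds. The function name and sample values are illustrative assumptions; the article does not specify which HRV features any particular system extracts.

```python
import math


def rmssd(rr_intervals_ms: list[float]) -> float:
    """Root mean square of successive differences (RMSSD) between RR intervals.

    rr_intervals_ms: times between consecutive heartbeats, in milliseconds.
    Returns the HRV value in milliseconds.
    """
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Difference between each interval and the one before it.
    diffs = [later - earlier for earlier, later in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    # Mean of the squared differences, then the square root.
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    # Hypothetical RR intervals from a short recording.
    print(rmssd([800, 810, 800, 810]))  # prints 10.0
```

A value like this would typically be one entry in a larger feature vector alongside EEG and eye-tracking features, rather than a direct readout of emotion on its own.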

Multi-modal emotion recognition also adds a new dimension to the study of emotions. By analyzing visual, auditory, and tactile inputs together, researchers can gain a holistic view of how emotions manifest in different scenarios. Combining AI techniques with traditional psychological methods in this way promises to deepen our understanding of emotional behavior.
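
To make the idea of combining channels concrete, here is a minimal sketch of late fusion, one common multi-modal strategy: each modality (for example a facial-expression model and a voice model) produces its own emotion probabilities, and the results are merged by a weighted average. The label set, modality names, weights, and the `late_fusion` and `top_emotion` functions are all hypothetical; the article does not say how any particular system fuses its inputs, and many systems instead combine features earlier or learn the combination.

```python
# Emotion labels shared by every modality's classifier (an assumption for this sketch).
EMOTIONS = ("happy", "sad", "angry", "neutral")


def late_fusion(modality_probs: dict[str, list[float]],
                weights: dict[str, float]) -> dict[str, float]:
    """Fuse per-modality probability vectors with a weighted average.

    Only modalities present in modality_probs contribute, and their weights
    are renormalized so the fused scores still sum to 1.
    """
    total = sum(weights[m] for m in modality_probs)
    if total <= 0:
        raise ValueError("weights of available modalities must sum to a positive value")
    fused = [0.0] * len(EMOTIONS)
    for modality, probs in modality_probs.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{modality}: expected {len(EMOTIONS)} probabilities")
        w = weights[modality] / total
        for i, p in enumerate(probs):
            fused[i] += w * p
    return dict(zip(EMOTIONS, fused))


def top_emotion(fused: dict[str, float]) -> str:
    """Return the label with the highest fused score."""
    return max(fused, key=fused.get)


if __name__ == "__main__":
    # Hypothetical classifier outputs for one moment in time.
    outputs = {
        "face": [0.7, 0.1, 0.1, 0.1],
        "voice": [0.4, 0.3, 0.1, 0.2],
    }
    # "touch" has a weight but no output here, so it is skipped.
    weights = {"face": 0.6, "voice": 0.4, "touch": 0.2}
    fused = late_fusion(outputs, weights)
    print(top_emotion(fused))  # prints happy
```

Renormalizing over the available modalities lets the sketch keep working when a channel drops out, such as a missing audio track; in a real system the weights would more likely be tuned on validation data than set by hand.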

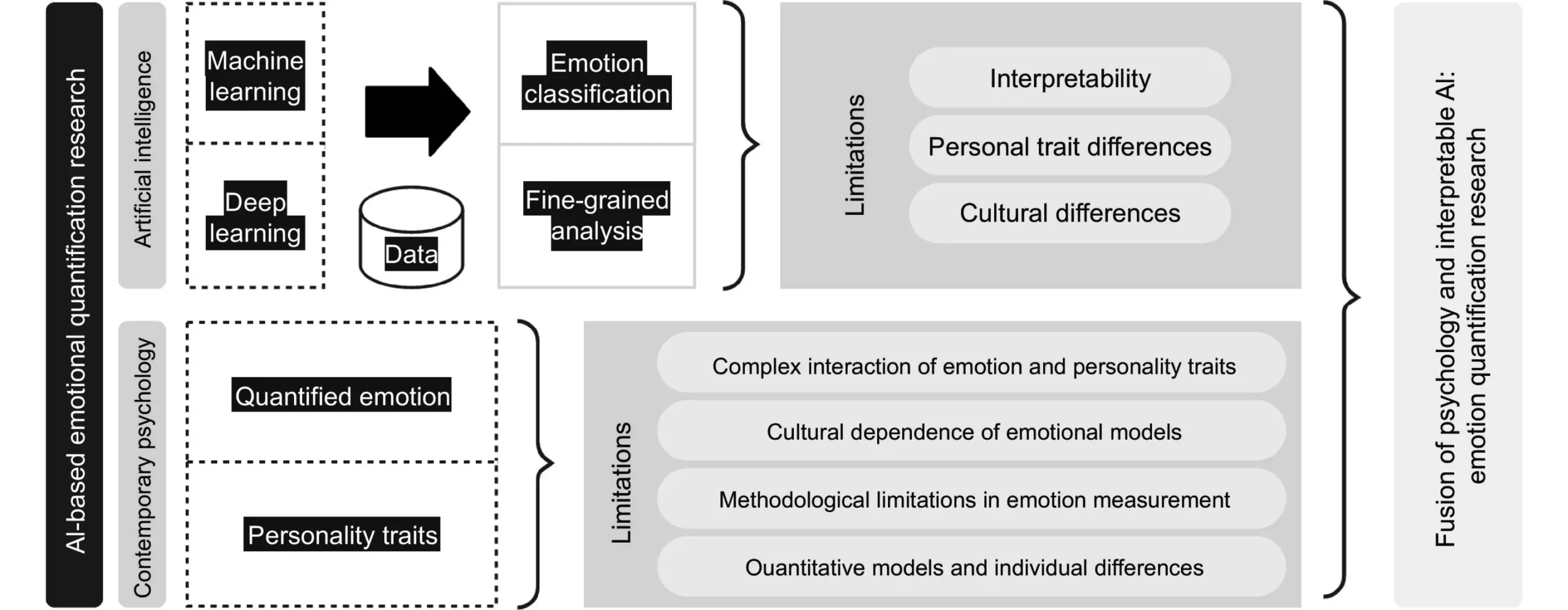

For emotion recognition technologies to reach their full potential, collaboration across disciplines, from AI to psychology and psychiatry, is crucial. Interdisciplinary teams can draw on the distinct strengths of each field, which supports breakthroughs both in understanding emotions and in how AI systems can quantify and identify them.

Author and researcher Feng Liu emphasizes the revolutionary potential of these technologies across diverse sectors. “By understanding human emotions, we can enhance personal experiences and forge deeper connections between humans and tech,” she states. As mental health becomes an increasingly high priority for public welfare, AI that can monitor and interpret emotional states could help streamline mental health assessments and interventions.

While the promise of AI in recognizing and analyzing emotions is enticing, significant ethical considerations must be addressed. Issues surrounding data privacy, safety, and transparency are paramount, especially in applications related to healthcare or psychological counseling. Those developing such systems must prioritize stringent data handling protocols to ensure the protection of sensitive emotional information.

Cultural sensitivity also poses a challenge for developing universally accurate emotion recognition algorithms. Because emotions are expressed differently across cultures, systems must be designed to accommodate these differences, both to avoid misinterpretation and to foster trust in the technology.

The path toward harnessing AI for emotion recognition is both promising and complex. As researchers and practitioners continue to explore this fusion of technology and psychology, the goal remains to create inclusive, sensitive, and effective systems that enhance human well-being. Emotion quantification technology could not only transform mental health assessment and treatment but also foster deeper human interactions, paving the way for a more empathetic society. This blend of tradition and technological innovation has the potential to profoundly change how we understand and engage with one of the most elemental aspects of human existence: our emotions.
