Quantum computing research is increasingly shaped by the interplay between classical algorithms and quantum hardware. In a new study, researchers from the University of Chicago’s Department of Computer Science, the Pritzker School of Molecular Engineering, and Argonne National Laboratory have developed a classical algorithm that simulates Gaussian boson sampling (GBS) experiments. The algorithm clarifies what today’s noisy quantum devices can and cannot do, and it points toward closer collaboration between quantum and classical computing in overcoming long-standing computational barriers.

Gaussian boson sampling has become a focal point in discussions of quantum advantage, the ability of quantum computers to perform certain tasks far more efficiently than classical computers. Theoretical work on GBS provides a framework suggesting that, for this task, quantum devices can vastly outperform classical computation. In practice, however, demonstrating that advantage has been complicated by the imperfections typical of real quantum experiments, particularly noise and photon loss, which erode the quantum system’s potential edge.
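Why is ideal GBS believed to be hard for classical computers? In the standard theory of GBS with a pure Gaussian input state, the probability of detecting a given pattern of photons is proportional to the squared magnitude of a matrix function called the hafnian, evaluated on a submatrix determined by the interferometer and the squeezing. Computing hafnians is #P-hard in general. The minimal sketch below is our own illustration, not code from the study, and its function names are hypothetical: it computes a hafnian by brute force as a sum over perfect matchings, and the number of terms, (2m - 1)!! for a 2m × 2m matrix, grows faster than exponentially.

```python
def hafnian(a):
    """Brute-force hafnian of a symmetric matrix (list of lists): the sum, over all
    perfect matchings of the indices, of the product of the matched entries."""
    n = len(a)
    if n == 0:
        return 1  # the empty matching contributes 1
    if n % 2 == 1:
        return 0  # odd dimension: no perfect matchings exist
    total = 0
    # Pair index 0 with every other index j, then recurse on the remaining indices.
    for j in range(1, n):
        rest = [k for k in range(1, n) if k != j]
        sub = [[a[p][q] for q in rest] for p in rest]
        total += a[0][j] * hafnian(sub)
    return total


def count_matchings(n):
    """Number of perfect matchings of n = 2m items, i.e. (2m - 1)!!."""
    count = 1
    for k in range(n - 1, 0, -2):
        count *= k
    return count


if __name__ == "__main__":
    ones = [[1] * 6 for _ in range(6)]
    # For the all-ones matrix every matching contributes 1, so both values are 15.
    print(hafnian(ones), count_matchings(6))
```

Practical libraries such as The Walrus use much faster hafnian algorithms, though their cost still grows exponentially; the brute-force version only shows where the difficulty comes from.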

Assistant Professor Bill Fefferman emphasizes the complications these imperfections introduce: although the theoretical possibilities look promising, real quantum experiments fall short of the ideal. Experiments by the University of Science and Technology of China and by the Canadian quantum company Xanadu served as key reference points for the new study. Those experiments showed that quantum devices can generate outputs consistent with GBS predictions, but factors such as environmental noise often obscure the results, leading researchers to question earlier claims of quantum advantage.

Fefferman stresses the need for rigorous assessments of how noise affects quantum performance. The study responds directly to that need, clarifying how classical computing can complement quantum devices in practice.

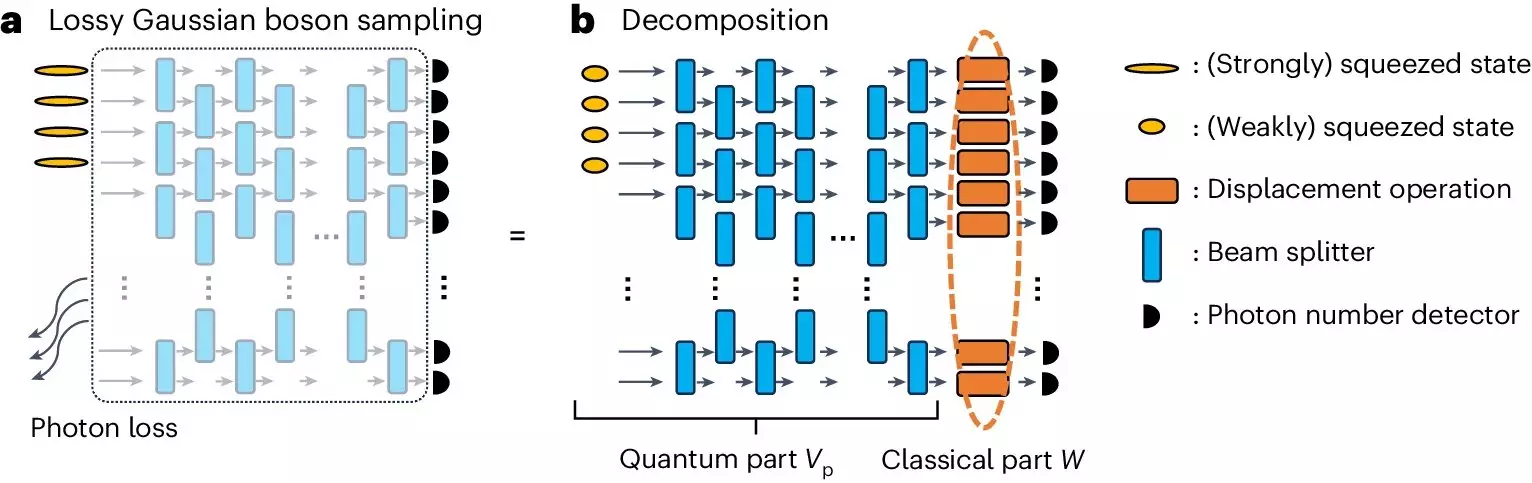

The new classical simulation algorithm handles the noisy conditions of current GBS experiments by using tensor-network methods, which can effectively capture the behavior of quantum states when photon loss rates are high. On several benchmarks, the classical simulation reproduces the ideal GBS output distribution more faithfully than several state-of-the-art GBS experiments do, an unexpected but welcome result for understanding what these devices actually achieve.
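Tensor-network methods represent a many-body quantum state as a chain of small tensors, a matrix product state (MPS), whose size is set by a bond dimension chi; the more entanglement a state carries, the larger chi must be. Roughly speaking, heavy loss and noise reduce the entanglement a simulator has to track, which is what can make a modest chi workable. The generic sketch below builds an MPS from a full state vector by sequential singular value decompositions and truncates each bond to at most chi. It is not the study’s simulator: it uses qubit-like sites for brevity (in a photonic setting the local dimension d would be a photon-number cutoff, an assumption here), and the function names are hypothetical.

```python
import numpy as np


def to_mps(psi, n, d, chi, tol=1e-12):
    """Split a state vector on n sites (local dimension d) into MPS tensors of shape
    (left bond, d, right bond) by sequential SVD, keeping at most chi singular values
    per cut and dropping singular values below tol."""
    tensors = []
    rest = psi.reshape(1, -1)  # (left bond, all remaining sites)
    for _ in range(n - 1):
        left = rest.shape[0]
        u, s, vh = np.linalg.svd(rest.reshape(left * d, -1), full_matrices=False)
        k = max(1, min(chi, int(np.count_nonzero(s > tol))))  # truncated bond dimension
        tensors.append(u[:, :k].reshape(left, d, k))
        rest = s[:k, None] * vh[:k, :]  # carry the singular values to the right
    tensors.append(rest.reshape(rest.shape[0], d, 1))
    return tensors


def from_mps(tensors):
    """Contract the MPS back into a full state vector."""
    out = tensors[0]
    for t in tensors[1:]:
        out = np.tensordot(out, t, axes=([-1], [0]))
    return out.reshape(-1)


def fidelity(psi, phi):
    """|<psi|phi>|^2 / <phi|phi>, so the norm lost to truncation is factored out."""
    return abs(np.vdot(psi, phi)) ** 2 / np.vdot(phi, phi).real


if __name__ == "__main__":
    n, d = 3, 2
    ghz = np.zeros(d ** n)
    ghz[0] = ghz[-1] = 1 / np.sqrt(2)  # (|000> + |111>) / sqrt(2), Schmidt rank 2 at every cut
    for chi in (1, 2):
        approx = from_mps(to_mps(ghz, n, d, chi))
        # chi = 1 forces a product state (fidelity at most 0.5); chi = 2 is exact up to rounding.
        print(chi, fidelity(ghz, approx))
```

The same code applied to a random state would need chi to grow exponentially with n to stay accurate; tensor-network simulators are efficient only when the states involved keep the required bond dimension small.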

Fefferman frames the findings as an insight rather than a setback for quantum computing, arguing that this progress creates opportunities for better algorithms and more capable quantum technologies. At the same time, the algorithm’s ability to reproduce the ideal GBS output distribution raises serious questions about the validity and scope of existing claims of quantum superiority.

The results have significant implications for the design of future quantum experiments. For example, by raising photon transmission rates (that is, reducing loss) and generating more strongly squeezed states, researchers could boost the effective performance of GBS experiments and obtain more reliable outputs. The findings thus improve our understanding of existing quantum technologies and indicate where future quantum devices most need to improve.
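A single-mode calculation shows why transmission and squeezing matter. Under a pure-loss channel with transmission eta, a squeezed vacuum’s covariance matrix V becomes eta V + (1 - eta) I (with the vacuum normalized to the identity). That lossy state can be split into a pure squeezed state with a reduced, effective squeezing s_eff plus classical Gaussian noise, which a computer can sample directly as random displacements. As we understand it, a decomposition of this kind is the starting point of the group’s simulator, but the sketch below is our own illustration with hypothetical function names, not the study’s code. Raising eta or the initial squeezing r both increase s_eff, leaving more of the state in its genuinely quantum part.

```python
import numpy as np


def lossy_variances(r, eta):
    """Quadrature variances (vacuum = 1) of single-mode squeezed vacuum with squeezing
    parameter r after a pure-loss channel with transmission eta."""
    v_sq = eta * np.exp(-2 * r) + (1 - eta)   # squeezed quadrature
    v_anti = eta * np.exp(2 * r) + (1 - eta)  # anti-squeezed quadrature
    return v_sq, v_anti


def split_pure_plus_noise(r, eta):
    """Write diag(v_sq, v_anti) = diag(e^{-2s}, e^{2s}) + diag(0, w): a pure squeezed
    state with effective squeezing s plus classical noise of variance w >= 0."""
    v_sq, v_anti = lossy_variances(r, eta)
    s_eff = -0.5 * np.log(v_sq)     # the pure part keeps the squeezed-quadrature variance
    w = v_anti - np.exp(2 * s_eff)  # leftover variance, realizable by random displacements
    return s_eff, w


if __name__ == "__main__":
    r = 1.0
    for eta in (0.3, 0.6, 0.9):
        s_eff, w = split_pure_plus_noise(r, eta)
        pure_photons = np.sinh(s_eff) ** 2     # mean photon number of the pure part
        surviving_photons = eta * np.sinh(r) ** 2  # mean photon number after loss
        print(f"eta={eta}: s_eff={s_eff:.3f}, noise w={w:.3f}, "
              f"pure-part share of photons={pure_photons / surviving_photons:.2f}")
```

The split is always valid for 0 ≤ eta ≤ 1 because v_sq * v_anti ≥ 1 (an AM-GM argument), which makes w non-negative. At eta = 1 the noise vanishes and s_eff equals r.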

The work also reaches beyond computational theory, bearing on the broader potential of quantum technologies. Quantum computing could transform how hard problems are approached in fields ranging from cryptography and materials science to drug discovery.

Continued partnership between quantum and classical computing will be crucial to realizing these advances. As researchers refine their methods and probe the limits of computation, collaboration across the two paradigms promises to improve how complex problems are solved across industries.

Fefferman’s earlier work with colleagues including Liang Jiang and Changhun Oh laid the groundwork for ongoing inquiry into the capabilities of noisy intermediate-scale quantum (NISQ) devices. That research examined how photon loss affects the cost of classical simulation and what this means for claims of quantum supremacy.

In short, a robust classical algorithm for simulating Gaussian boson sampling experiments marks a pivotal advance in the debate over quantum computing. It shows how quantum and classical technologies can coexist and provides a foundation for future research. By clarifying the challenges and refining methods in this area of computation, the researchers set the stage for applications that could change how we tackle complex computational problems, in both classical and quantum computing.
