In recent years, the rapid advancement of large language models (LLMs) has transformed various aspects of our lives, from how we seek information to how we communicate. Applications such as ChatGPT have become integrated into daily routines, influencing everything from workplace discussions to educational environments. These AI systems possess the ability to understand and generate human language using extensive datasets, which makes them powerful tools for enhancing human cognition. Yet, the same capabilities that offer potential benefits also introduce significant challenges, particularly concerning collective intelligence—a cornerstone of effective decision-making within communities.

Understanding Collective Intelligence

Collective intelligence refers to the ability of groups to pool knowledge, skills, and insights to achieve outcomes that surpass what individuals could accomplish independently. This phenomenon is observable in diverse settings, from collaborative platforms like Wikipedia, where users contribute to a communal body of knowledge, to professional teams strategizing on complex projects. The success of such collaborations often hinges on effective communication and an inclusive process that considers diverse viewpoints. This is where LLMs present both an opportunity and a threat: they can facilitate these dynamics, and they can also hinder them.

Recent research conducted by an interdisciplinary team from Copenhagen Business School and the Max Planck Institute for Human Development highlights several ways in which LLMs can enhance collective intelligence. An essential advantage of these models is their potential for increased accessibility. By breaking down language barriers through translation and providing writing assistance, LLMs enable a wider range of individuals to participate in discussions, thus enriching the diversity of thoughts and ideas. Additionally, LLMs can significantly accelerate the processes of idea generation and consensus building by organizing information, summarizing diverse opinions, and highlighting relevant points for consideration.

As Ralph Hertwig, a co-author of the study, states, the challenge lies in using LLMs in a way that harnesses their benefits while recognizing and addressing the risks they present. That balance is central to the debate over the role LLMs should play in collective deliberation.

Despite the potential benefits, the integration of LLMs into collective processes is fraught with challenges. One major concern is the risk of diminishing individual motivation to contribute to open-source knowledge platforms. With an increasing reliance on proprietary LLMs for information, users may become disincentivized to share their expertise on platforms like Wikipedia and Stack Overflow, which could ultimately threaten the integrity of these collaborative knowledge repositories.

Another significant concern is the emergence of false consensus and pluralistic ignorance. In false consensus, individuals mistakenly believe that the majority shares their opinion; in pluralistic ignorance, individuals mistakenly believe that the majority accepts a norm or view that most people privately reject. As Jason Burton, the study’s lead author, points out, LLMs are trained on readily available online data, so their responses can fail to represent minority viewpoints. This underrepresentation can foster an illusion of agreement and obscure the range of perspectives needed for a nuanced understanding of complex issues.

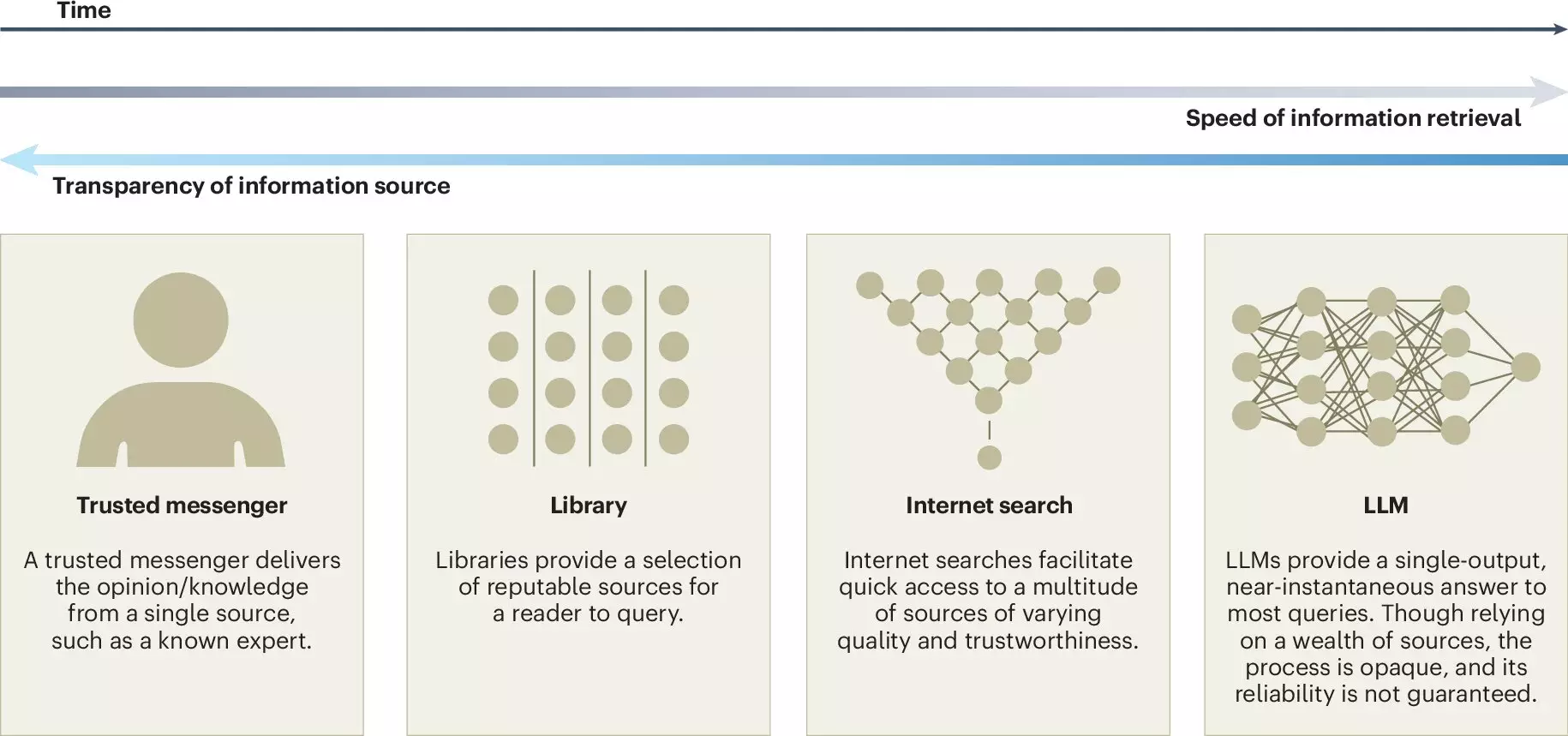

To harness the benefits of LLMs while mitigating their potential harms, the authors of the study urge a proactive approach to LLM development. Their recommendations include greater transparency about training data sources and external audits of model developers. Such measures would provide insight into how LLMs are built and foster a better understanding of their societal impact.

The study also emphasizes the importance of incorporating human perspectives in the development of LLMs. Ensuring diverse representation, so that a wide range of viewpoints is included, can help reduce the risks of bias and homogenization of knowledge. These recommendations support the responsible use of LLMs and encourage further research into how AI can strengthen collective intelligence.

The interplay between large language models and collective intelligence presents both opportunity and risk. As these models become increasingly embedded in societal decision-making, it is crucial to adopt thoughtful and transparent strategies for their development and implementation. By fostering inclusivity and striving for diverse representation, it may be possible to use LLMs to enhance human collaboration while guarding against misinformation and cultural homogenization. Continued dialogue and research will be vital as both collective intelligence and artificial intelligence evolve.
