Unmanned aerial vehicles (UAVs), commonly called drones, have become valuable tools in environmental monitoring, mapping, and disaster response. They can gather data from locations that are otherwise difficult to reach, which has changed how spatial data is collected. Researchers at Sun Yat-Sen University and the Hong Kong University of Science and Technology have now introduced a system aimed at autonomous, UAV-based three-dimensional reconstruction of environments.

The system, called SOAR, lets a team of UAVs autonomously explore and reconstruct an environment quickly and efficiently. It combines LiDAR and visual sensors, so the team gathers the data needed to generate accurate 3D models. SOAR is designed to combine the strengths of existing model-based and model-free approaches while mitigating the shortcomings of each.

Mingjie Zhang, one of the authors of the paper describing the system, pointed out that traditional 3D reconstruction methods are often either too costly and reliant on prior knowledge of the scene or constrained by the limits of local planning. SOAR aims to bridge this gap, offering both speed and precision through a heterogeneous multi-UAV team whose members cooperate during data collection.

At the core of SOAR is an incremental viewpoint generation technique that updates in real time as the UAVs gather information about the environment. Rather than relying on static or predefined task assignments, the system uses adaptive strategies that re-optimize the UAVs' tasks during the mission.
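
To make the idea of incremental viewpoint generation concrete, here is a minimal Python sketch. It is not the authors' method: the function name `update_viewpoints`, the `standoff` and `min_spacing` parameters, and the rule of placing a camera position along the surface normal are all assumptions chosen for illustration. The only point it demonstrates is that new viewpoints are added for newly observed surface, while the existing set is left in place rather than recomputed from scratch.

```python
import math

def update_viewpoints(viewpoints, new_surface_points, standoff=3.0, min_spacing=2.0):
    """Add candidate viewpoints for newly observed surface points only.

    viewpoints: list of (x, y, z) camera positions planned so far.
    new_surface_points: list of ((x, y, z), (nx, ny, nz)) pairs, each a
        surface point and its unit outward normal (hypothetical input format).
    standoff: assumed camera distance from the surface.
    min_spacing: assumed minimum distance between two viewpoints.
    """
    for (px, py, pz), (nx, ny, nz) in new_surface_points:
        # Place the candidate in front of the surface, along its normal.
        candidate = (px + standoff * nx, py + standoff * ny, pz + standoff * nz)
        # Keep it only if no existing viewpoint is already close by.
        if all(math.dist(candidate, v) >= min_spacing for v in viewpoints):
            viewpoints.append(candidate)
    return viewpoints
```

Calling the function again whenever the map grows extends the viewpoint list in place, so viewpoints that were already planned stay stable between updates.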

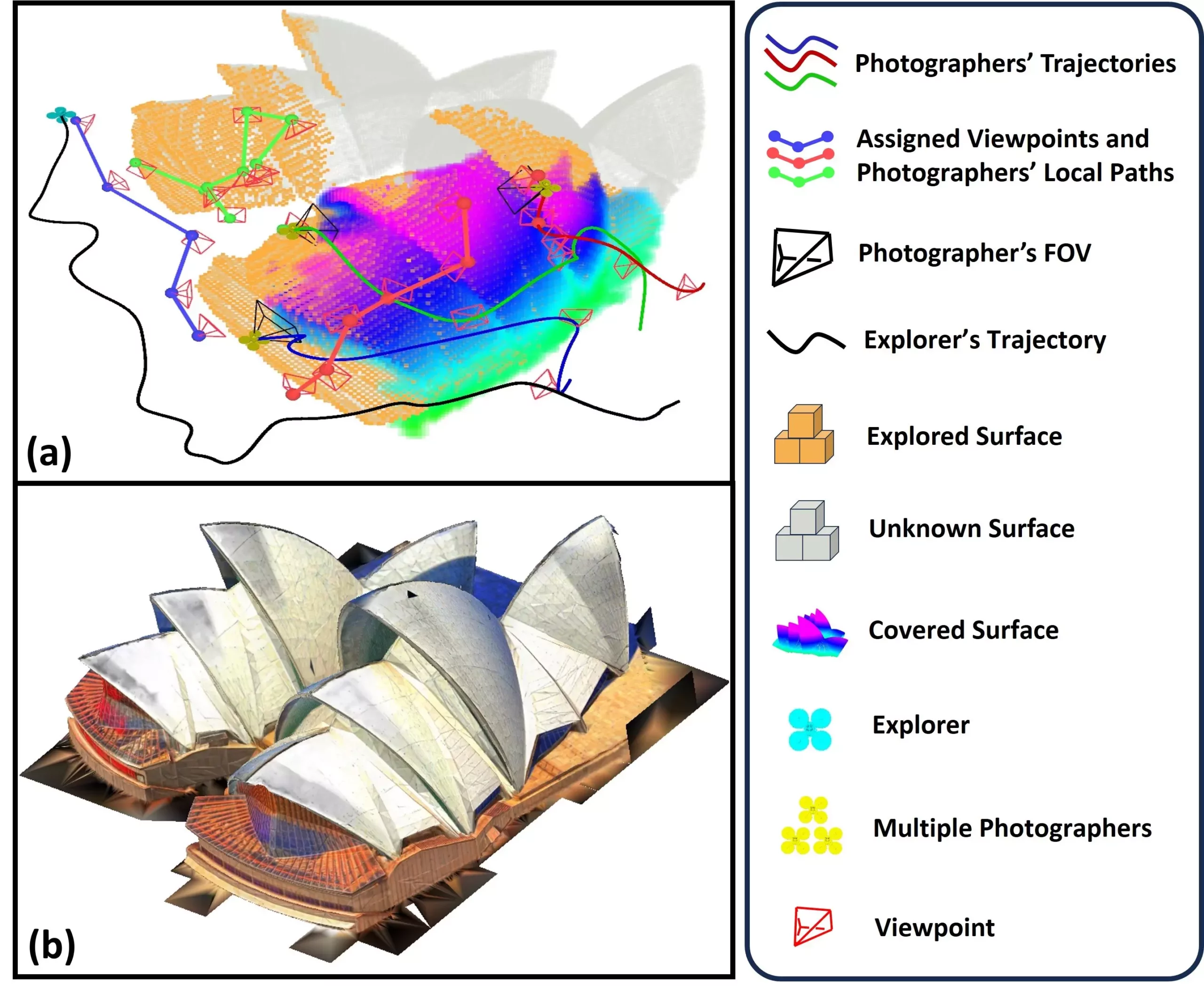

The system operates with two types of UAVs: one "explorer," equipped with LiDAR for rapid mapping of the environment, and several "photographers," which capture images at the generated viewpoints. As the explorer charts the environment, it continuously updates a set of viewpoints that the photographers then visit. The viewpoints are divided among the photographers by solving a problem the authors call Consistent-MDMTSP, a consistent variant of the multiple-depot multiple traveling salesman problem, so that workloads are balanced across the photographers while task assignments remain consistent as the plan is updated.
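
The trade-off between balanced workload and consistent assignments can be illustrated with a small Python sketch. This is a greedy stand-in, not the Consistent-MDMTSP formulation or solver from the paper: the function `assign_viewpoints`, the `consistency_bonus` parameter, and the scoring rule are assumptions made for illustration. Each photographer starts from its own position (its "depot"), and each step gives the next viewpoint to whichever photographer would end up with the shortest route, with a discount for keeping a viewpoint with the photographer that held it in the previous planning round.

```python
import math

def assign_viewpoints(starts, viewpoints, previous=None, consistency_bonus=2.0):
    """Greedy, illustrative assignment of viewpoints to photographer UAVs.

    starts: {uav_name: (x, y, z)} current photographer positions.
    viewpoints: {vp_id: (x, y, z)} targets to photograph.
    previous: {vp_id: uav_name} assignment from the last planning round.
    Returns ({vp_id: uav_name}, {uav_name: route_length}).
    """
    previous = previous or {}
    position = dict(starts)
    load = {name: 0.0 for name in starts}
    assignment = {}
    remaining = dict(viewpoints)
    while remaining:
        best = None
        for vp_id, vp in remaining.items():
            for name in starts:
                # Route length this UAV would have after visiting vp next.
                new_load = load[name] + math.dist(position[name], vp)
                score = new_load
                # Discount pairs that repeat the previous round's assignment.
                if previous.get(vp_id) == name:
                    score -= consistency_bonus
                if best is None or score < best[0]:
                    best = (score, vp_id, name, new_load)
        _, vp_id, name, new_load = best
        assignment[vp_id] = name
        load[name] = new_load
        position[name] = remaining.pop(vp_id)
    return assignment, load
```

Because each step favors the shortest resulting route, a photographer that already has a long route becomes less likely to receive further viewpoints, which roughly balances the workload. Raising `consistency_bonus` makes reassignment between rounds rarer at the cost of longer routes.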

One of SOAR's main results is textured 3D models built from images captured at multiple angles and positions. This follows from the carefully coordinated data collection, which keeps reconstruction quality high while the environment is explored efficiently.

Zhang noted that collecting data with both LiDAR and cameras enables rapid exploration of the environment while maintaining high reconstruction quality. The system's ability to adapt to evolving scene information is key: by eliminating unnecessary detours and optimizing data acquisition paths, SOAR improves the operational efficiency of the UAV team.

SOAR also has practical applications. It could be a valuable asset in urban planning and infrastructure management, where rapid and precise environmental modeling matters. It also holds potential for preserving cultural heritage, where researchers could reconstruct and document historical sites and artifacts as detailed 3D models.

The system could also prove useful in disaster response, where time is critical. SOAR could enable first responders to assess damage quickly and plan recovery efforts by acquiring detailed geographic information within hours rather than days.

Looking ahead, the research team aims to tackle hurdles related to the real-world deployment of SOAR. Bridging the gap between simulation and real environments involves addressing issues such as localization errors and communication failures during UAV operations. In addition, developing adaptive task allocation strategies could significantly improve UAV coordination and make environment mapping even faster.

Incorporating active reconstruction techniques into SOAR is another avenue the team is keen to explore. With real-time feedback during data collection, the UAVs could adapt their strategies dynamically, improving the overall efficiency and accuracy of the reconstruction process.

SOAR reflects a significant shift in how UAVs are used for 3D reconstruction. Its combination of new data acquisition techniques, responsive system design, and broad real-world applications positions it as a pioneering model among autonomous aerial systems. As the researchers work to overcome the remaining challenges, further advances in this rapidly developing field can be expected.
