As deep learning continues to reshape sectors such as healthcare and financial services, its dependence on powerful cloud-based infrastructure raises significant cybersecurity concerns. Organizations, particularly those handling sensitive patient information, face the dilemma of harnessing advanced AI technologies while safeguarding confidential data. Researchers at MIT have responded to this challenge by developing a security protocol that combines quantum mechanics with deep learning and is designed to keep data protected during cloud-based computation without compromising the accuracy of the model.

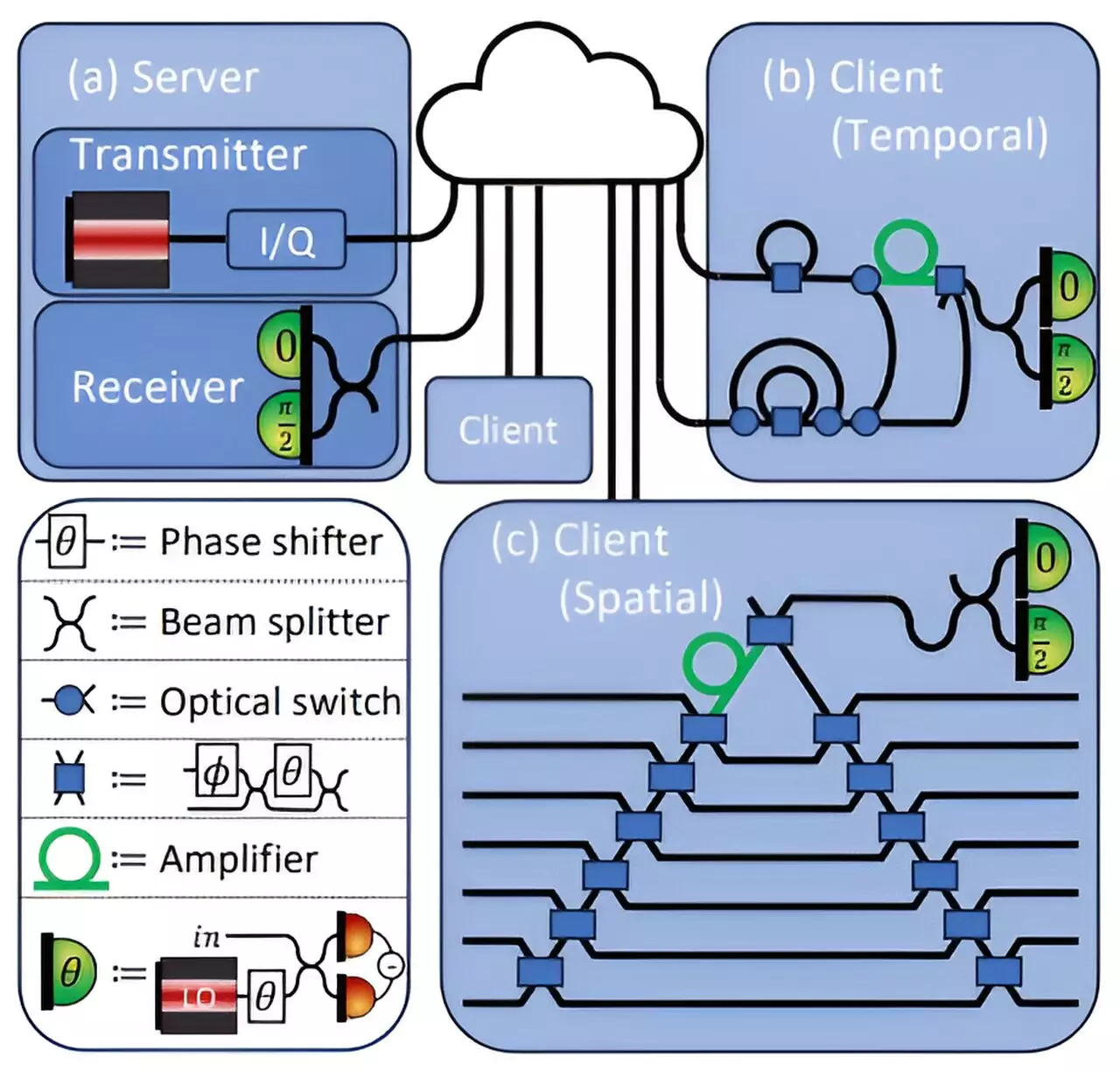

The security protocol devised by the MIT researchers uses the quantum properties of light to create a secure channel between a client (often a healthcare provider holding sensitive medical data) and a deep learning server that processes this data to produce predictions. At the heart of the approach is the idea of encoding information into light and relying on the no-cloning principle of quantum mechanics. Traditional digital data can be copied and intercepted with relative ease, whereas quantum information cannot be perfectly duplicated, and an attempt to copy it leaves a detectable disturbance. This is what strengthens the security of the exchange.

Led by Kfir Sulimany, the MIT research team, which includes Sri Krishna Vadlamani, Ryan Hamerly, Prahlad Iyengar, and Professor Dirk Englund, the senior author, combined their expertise to produce the protocol. The work was presented at the Annual Conference on Quantum Cryptography (QCrypt 2024), underscoring the significance of merging quantum physics with artificial intelligence. The findings point to a future in which sensitive computations can be performed securely, paving the way for broader adoption of AI in healthcare.

The protocol addresses a two-party scenario: a client holds proprietary, sensitive data, and a server holds a deep learning model that can analyze it. For instance, when medical images are used to estimate the likelihood of a cancer diagnosis, the prediction should be obtained without exposing the patient’s data. The server encodes the neural network’s weights (the parameters that govern the model’s predictions) using laser light, an arrangement intended to shield both the data and the model itself from unwanted exposure.

The interaction between client and server is tightly controlled. The client can access only the result it needs to keep feeding information through the neural network, which prevents it from gleaning detailed insights about the model’s architecture or parameters. This matters for organizations that invest substantial resources in developing proprietary AI systems and need them shielded from competitors and malicious actors.

A Study in Balance: Security vs. Accuracy

One of the standout achievements of this approach is that it maintains high accuracy while offering robust security. The MIT team reported that their protocol delivers prediction accuracy of up to 96%. This reassures users about the reliability of the deep learning models being used and shows that security need not come at the cost of performance.

Furthermore, the method’s design inherently introduces minute errors when the results are measured, and the server can analyze these errors to check whether any information has leaked. By using light in this way, the protocol is designed to protect data privacy without degrading the deep learning model’s performance.
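
The layer-by-layer exchange and the server's error check can be pictured with a small, purely classical toy. It does not model quantum optics and is not the researchers' implementation: the names `client_step`, `server_check`, and `run_inference`, the Gaussian `disturbance` standing in for measurement errors, the `residual` the client hands back, and the `threshold` value are all assumptions made for illustration. In this toy nothing physically stops the client from reading the weights; in the real protocol that restriction comes from the no-cloning principle.

```python
import random

def matvec(weights, x):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def relu(v):
    return [max(0.0, a) for a in v]

def client_step(weights, activation, disturbance, rng, last):
    """Compute one layer's output and build the residual sent back to the server.

    `disturbance` is a hypothetical stand-in for measurement errors: the more
    the client tries to extract from the weights, the larger it is assumed to be.
    """
    out = matvec(weights, activation)
    if not last:
        out = relu(out)
    residual = [[w + rng.gauss(0.0, disturbance) for w in row] for row in weights]
    return out, residual

def server_check(sent, returned, threshold):
    """Return True if the RMS deviation of the returned weights is within threshold."""
    diffs = [(a - b) ** 2 for rs, rr in zip(sent, returned) for a, b in zip(rs, rr)]
    rms = (sum(diffs) / len(diffs)) ** 0.5
    return rms <= threshold

def run_inference(layers, x, disturbance, threshold=0.05, seed=0):
    """Run the toy exchange; return (prediction, True if every layer passed the check)."""
    rng = random.Random(seed)
    activation = list(x)
    all_ok = True
    for i, weights in enumerate(layers):
        last = i == len(layers) - 1
        activation, residual = client_step(weights, activation, disturbance, rng, last)
        all_ok = server_check(weights, residual, threshold) and all_ok
    return activation, all_ok

# Hypothetical two-layer model and input
layers = [[[1.0, -1.0], [0.5, 0.5]], [[1.0, 2.0]]]
prediction, passed = run_inference(layers, [2.0, 1.0], disturbance=0.01)
print(prediction, passed)
```

Calling `run_inference` with a much larger `disturbance` (for example `0.5`) mimics a client that tries to extract more than it needs, and the server's check then fails. In the toy the prediction itself is computed from the undisturbed weights, so it says nothing about how accuracy behaves in the real protocol.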

The researchers are optimistic about the potential implications of their findings, particularly for federated learning, an area of AI in which multiple parties collaboratively train a model without sharing their raw data. That setting fits naturally with the goals of data privacy and efficient learning, and it suggests a path forward for industries grappling with data security mandates.

As the MIT team looks ahead, the marriage of deep learning and quantum key distribution presents intriguing possibilities. Experts such as Eleni Diamanti have recognized the ingenuity of employing quantum-based approaches to fortify digital privacy, and the work lays the groundwork for further investigation into practical implementations that could reshape the landscape of data security.

The development of quantum security protocols for deep learning is a significant leap towards addressing the challenges posed by data privacy in modern computing. Researchers at MIT have adeptly combined the realms of quantum mechanics and artificial intelligence, allowing for secure cloud-based computations that maintain the integrity of both sensitive data and proprietary algorithms. As industries increasingly rely on advanced AI solutions, such protocols will be critical in ensuring that innovations do not come at the expense of security, ultimately fostering greater confidence in the deployment of these technologies across myriad applications.
