Artificial intelligence is advancing rapidly, but a team of researchers at the Alberta Machine Intelligence Institute (Amii) has identified a significant hurdle for the field. In their paper “Loss of Plasticity in Deep Continual Learning,” published in Nature, they examine a puzzling problem in machine learning models that has largely gone unnoticed. The authors, Shibhansh Dohare, J. Fernando Hernandez-Garcia, Qingfeng Lan, Parash Rahman, A. Rupam Mahmood, and Richard S. Sutton, aim to shed light on a problem that could hinder the development of advanced AI capable of functioning effectively in the real world.

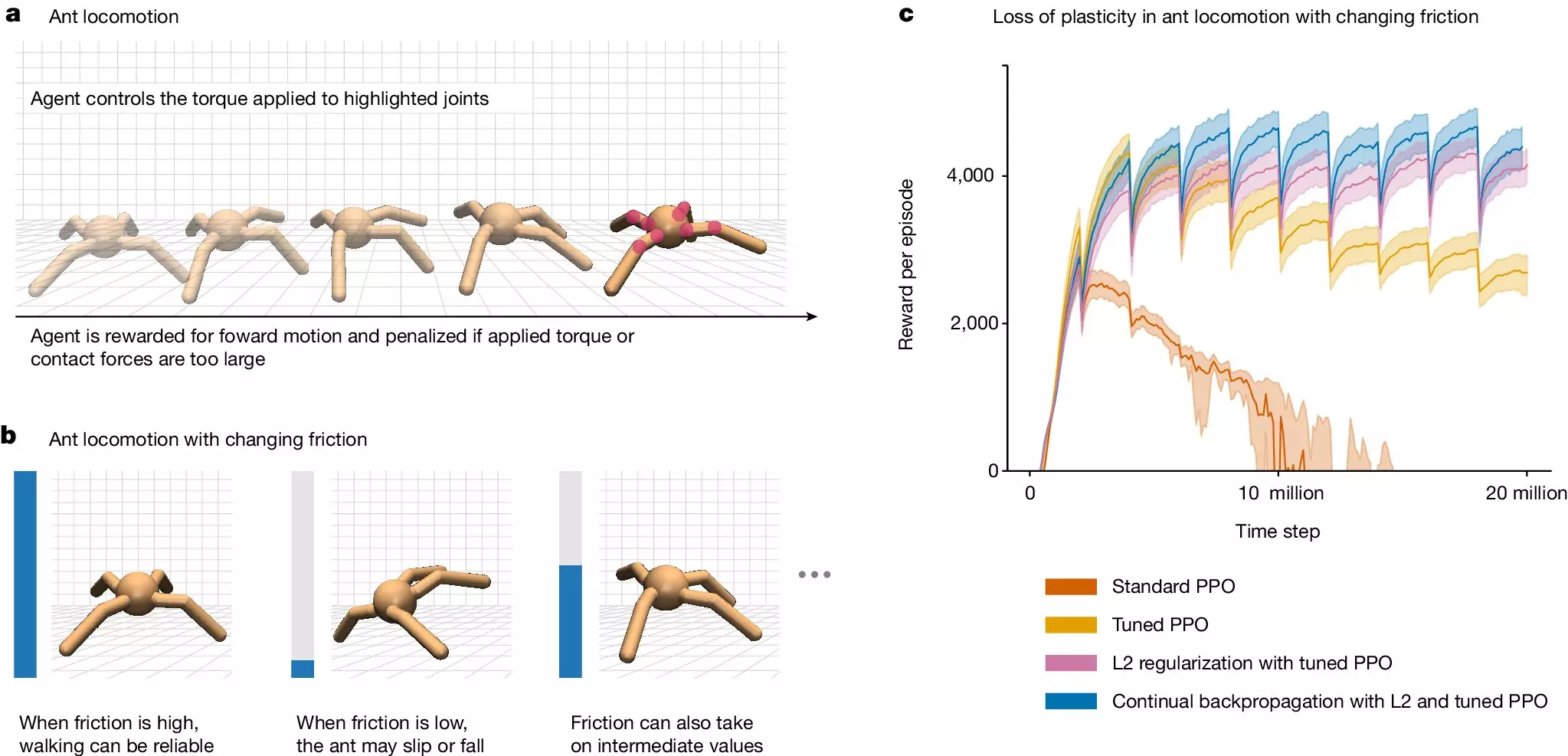

The team's key finding concerns a phenomenon called “loss of plasticity” in deep learning agents engaged in continual learning: over time, these agents lose their ability to learn new information. The authors treat this as a problem separate from catastrophic forgetting, the better-known tendency of networks to lose previously acquired knowledge. Loss of plasticity is a significant obstacle to building AI systems that can adapt to the complexity of the world and reach human-level capabilities.

Challenges of Current Deep Learning Models

The team points out that many existing deep learning models are not designed for continual learning. They cite models like ChatGPT, which are trained for a fixed period and then deployed without further learning. Merging new data with what these models already know is difficult and time-consuming, and often requires retraining the model from scratch. This limitation restricts the range of tasks these models can handle effectively.

To gauge how widespread loss of plasticity is, the research team ran a series of experiments. Using supervised learning tasks presented one after another, they showed that the networks' performance on successive tasks declined as they lost the ability to keep learning, in some cases until they learned no better than a shallow network. The effect held across many variations in the network and the learning algorithm, indicating that the problem is widespread in deep learning.

In search of a solution, the team developed a method called “continual backpropagation.” It modifies standard backpropagation to counter the buildup of units that have become inactive or contribute little to the network's output. By continually and randomly reinitializing a small fraction of these less-used units during learning, the models can keep learning over extended periods without losing plasticity. The result suggests that loss of plasticity is not an unavoidable property of deep networks, offering hope for more adaptable AI systems.
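The reinitialization idea can be illustrated with a small sketch. The code below is a hypothetical, simplified version, not the authors' implementation. It assumes a single hidden ReLU layer and scores each hidden unit with a running average of its activation magnitude times the summed magnitude of its outgoing weights; among units older than a maturity threshold, it periodically replaces the lowest-scoring ones. The class name and the `decay`, `rate`, and `maturity` parameters are illustrative assumptions, and the gradient updates that ordinary backpropagation would perform are omitted.

```python
import random


class ContinualBackpropLayer:
    """One hidden ReLU layer plus the bookkeeping for selective reinitialization.

    Hypothetical, simplified sketch: gradient updates are omitted; only
    utility tracking and reinitialization of low-utility units are shown.
    """

    def __init__(self, n_in, n_hidden, n_out, decay=0.99, seed=0):
        self.rng = random.Random(seed)
        self.n_in, self.n_hidden, self.n_out = n_in, n_hidden, n_out
        self.decay = decay
        # w_in[i] holds the incoming weights of hidden unit i.
        self.w_in = [self._init_row() for _ in range(n_hidden)]
        # w_out[k][i] is the weight from hidden unit i to output k.
        self.w_out = [[self.rng.uniform(-0.1, 0.1) for _ in range(n_hidden)]
                      for _ in range(n_out)]
        self.utility = [0.0] * n_hidden
        self.age = [0] * n_hidden
        self.to_replace = 0.0  # fractional replacement count carried between steps

    def _init_row(self):
        # Draw from the same distribution used at initialization (assumed uniform).
        bound = 1.0 / self.n_in ** 0.5
        return [self.rng.uniform(-bound, bound) for _ in range(self.n_in)]

    def forward(self, x):
        """Return hidden activations and outputs for one input vector."""
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.w_in]
        y = [sum(w * hi for w, hi in zip(row, h)) for row in self.w_out]
        return h, y

    def update_utility(self, h):
        """Running average of |activation| times the sum of |outgoing weights|."""
        for i in range(self.n_hidden):
            out_mag = sum(abs(self.w_out[k][i]) for k in range(self.n_out))
            contrib = abs(h[i]) * out_mag
            self.utility[i] = self.decay * self.utility[i] + (1 - self.decay) * contrib
            self.age[i] += 1

    def reinit_low_utility(self, rate, maturity):
        """Reinitialize the lowest-utility units among those older than `maturity`."""
        eligible = [i for i in range(self.n_hidden) if self.age[i] > maturity]
        self.to_replace += rate * len(eligible)
        n = int(self.to_replace)
        if n == 0:
            return []
        self.to_replace -= n
        chosen = sorted(eligible, key=lambda i: self.utility[i])[:n]
        for i in chosen:
            self.w_in[i] = self._init_row()  # fresh random incoming weights
            for k in range(self.n_out):
                # Zero outgoing weights: the new unit adds nothing to the output yet.
                self.w_out[k][i] = 0.0
            self.utility[i] = 0.0
            self.age[i] = 0
        return chosen


# Usage: track utility on every input and occasionally recycle weak units.
layer = ContinualBackpropLayer(n_in=3, n_hidden=4, n_out=2)
for step in range(300):
    x = [layer.rng.uniform(-1.0, 1.0) for _ in range(3)]
    h, _ = layer.forward(x)
    layer.update_utility(h)
    # A real learner would apply a gradient step to w_in and w_out here.
    layer.reinit_low_utility(rate=0.01, maturity=100)
```

Setting a replaced unit's outgoing weights to zero means the reinitialization does not immediately change the network's predictions, while the maturity threshold gives each fresh unit time to become useful before it can be replaced again.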

Implications and Future Research

The team’s findings expose a fundamental weakness of deep learning models in continual learning scenarios. By raising awareness of loss of plasticity, the authors hope to spur further research into better solutions. Continual backpropagation shows promise in mitigating the problem, but further work on new methods will be needed to overcome this obstacle fully.

The discovery of loss of plasticity in deep continual learning represents a critical step in understanding the limitations of current AI systems. By acknowledging and addressing this challenge, researchers can pave the way for the development of more advanced and adaptable artificial intelligence capable of handling the complexities of the real world.
