In a recent study published in Science Advances, a research team demonstrated that analog hardware built from Electrochemical Random Access Memory (ECRAM) devices has the potential for commercialization. As demand for artificial intelligence (AI) technology grows, hardware must keep up with the computational requirements of applications such as generative AI. The limitations of traditional digital hardware have spurred active research into analog hardware specialized for AI computation.

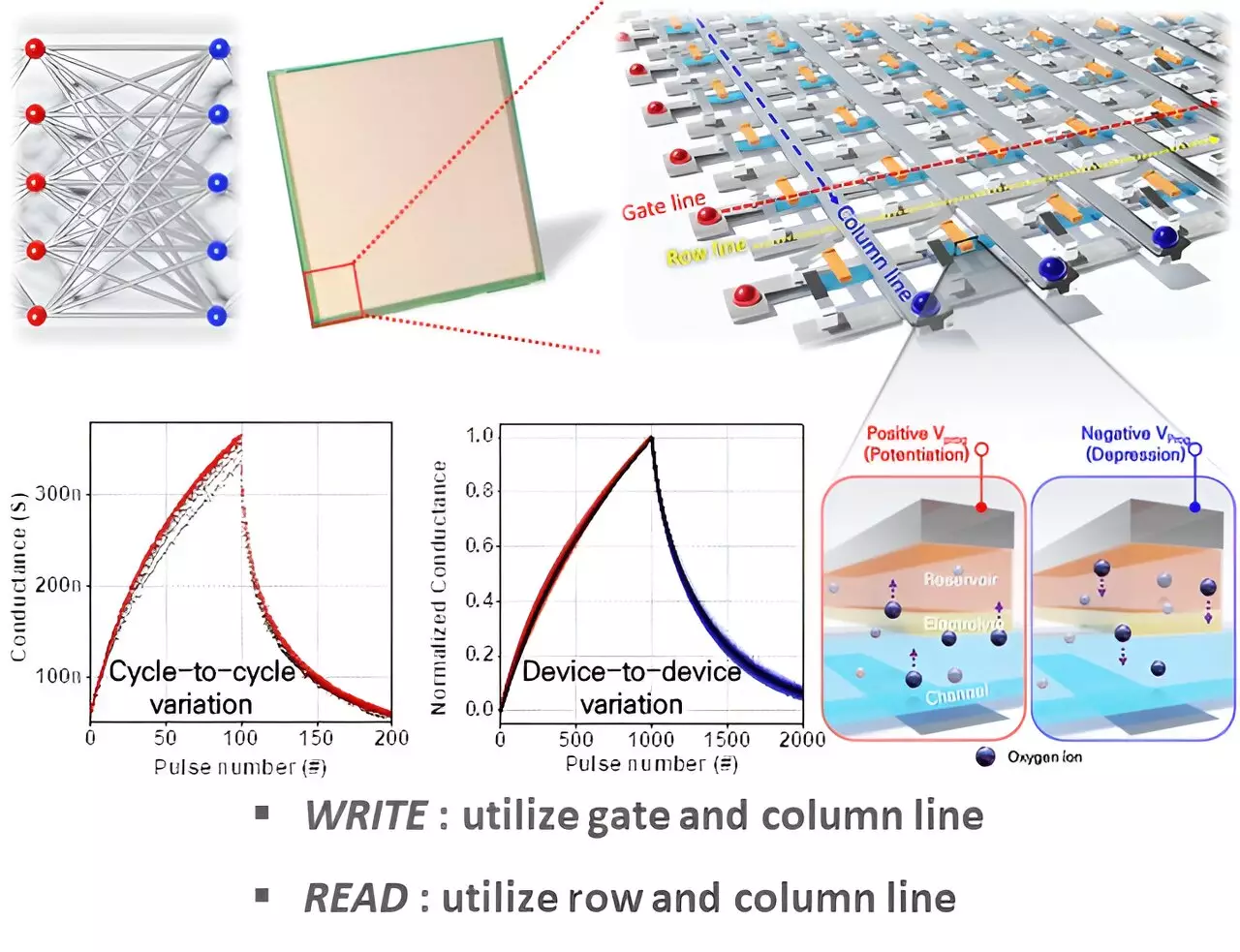

Analog hardware computes by adjusting the resistance of semiconductor devices with an external voltage or current. In a cross-point array, memory devices sit at the intersections of perpendicular row and column lines, so the array can process AI computations in parallel. This gives it an advantage over digital hardware for specific computational tasks and for continuous data processing. However, meeting the diverse requirements of both learning and inference has proven challenging for analog hardware.
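To make the parallelism concrete, here is a minimal sketch of how a cross-point array computes a matrix-vector product. It assumes ideal devices: each device passes a current equal to its conductance times the applied row voltage (Ohm's law), and each column line sums the currents flowing into it (Kirchhoff's current law). The function name and all numbers are illustrative and do not come from the study.

```python
# Toy model of a cross-point array: one conductance value per row/column crossing.
# All values are illustrative assumptions, not measurements from the study.

def crossbar_output_currents(conductances, input_voltages):
    """Return the current collected on each column line.

    conductances[i][j] is the conductance of the device joining row line i
    to column line j; input_voltages[i] is the voltage applied to row line i.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(input_voltages) != n_rows:
        raise ValueError("need one input voltage per row line")
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law per device; Kirchhoff's current law sums along the column.
            currents[j] += conductances[i][j] * input_voltages[i]
    return currents


G = [[1.0, 2.0],
     [3.0, 4.0],
     [5.0, 6.0]]          # 3 row lines x 2 column lines
v = [1.0, 0.5, 2.0]       # one voltage per row line
print(crossbar_output_currents(G, v))
```

The nested loops exist only in the simulation. In a physical array every device conducts at the same time, so all column sums appear in a single step, which is where the parallel advantage comes from.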

The research team, led by Professor Seyoung Kim, focused on ECRAM devices to address the limitations of traditional semiconductor memory devices. ECRAM manages electrical conductivity through ion movement and concentration, featuring a three-terminal structure with separate paths for reading and writing data. This unique design allows for operation at relatively low power, making it a promising candidate for AI computation.

The team fabricated three-terminal ECRAM devices in a 64×64 array. Their experiments showed that hardware incorporating these devices had excellent electrical and switching characteristics, along with high yield and uniformity. By applying the Tiki-Taka algorithm, an analog-based learning algorithm, the team was able to maximize the accuracy of AI neural network training computations on the ECRAM hardware.
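The article does not describe how Tiki-Taka works. As it is generally described in the analog-training literature, it keeps two arrays: a fast array A that receives the gradient updates, and a slow array C that periodically receives a scaled copy of one column of A, with the effective weight taken as W = γA + C. The sketch below illustrates only that two-array idea. It assumes ideal, perfectly linear devices (real devices update asymmetrically, which is the situation the algorithm is meant to tolerate), a single linear layer, and hypothetical hyperparameters `gamma`, `lr_fast`, `lr_slow`, and `transfer_every`. It is not the team's implementation.

```python
# Simplified two-array training loop in the spirit of Tiki-Taka.
# Assumptions (not from the study): ideal linear devices, one linear layer,
# and hypothetical hyperparameters gamma, lr_fast, lr_slow, transfer_every.

def matvec(matrix, vector):
    """Plain matrix-vector product on nested lists."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def train_two_array(samples, n_out, n_in, steps,
                    gamma=1.0, lr_fast=0.5, lr_slow=0.5, transfer_every=1):
    fast = [[0.0] * n_in for _ in range(n_out)]  # array A: receives gradient updates
    slow = [[0.0] * n_in for _ in range(n_out)]  # array C: receives transfers from A
    next_col = 0
    for step in range(steps):
        x, target = samples[step % len(samples)]
        # Forward pass with the effective weight W = gamma * A + C.
        y = [gamma * a + c for a, c in zip(matvec(fast, x), matvec(slow, x))]
        err = [t - yi for t, yi in zip(target, y)]
        # The outer-product update is applied only to the fast array A.
        for i in range(n_out):
            for j in range(n_in):
                fast[i][j] += lr_fast * err[i] * x[j]
        # Periodically read one column of A and add a scaled copy to C.
        if (step + 1) % transfer_every == 0:
            for i in range(n_out):
                slow[i][next_col] += lr_slow * fast[i][next_col]
            next_col = (next_col + 1) % n_in
    return [[gamma * a + c for a, c in zip(row_a, row_c)]
            for row_a, row_c in zip(fast, slow)]


# Illustrative target mapping; one-hot inputs keep the arithmetic easy to follow.
w_true = [[0.5, -1.0],
          [2.0, 0.25]]
inputs = [[1.0, 0.0], [0.0, 1.0]]
samples = [(x, matvec(w_true, x)) for x in inputs]

learned = train_two_array(samples, n_out=2, n_in=2, steps=200)
max_error = max(abs(l - t)
                for row_l, row_t in zip(learned, w_true)
                for l, t in zip(row_l, row_t))
print(max_error)
```

With these settings the fast array settles toward zero while the slow array converges to the target weights, so the slow array ends up holding the learned values. Different hyperparameters or non-ideal device models would change the behavior.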

The work is notable because the 64×64 array is the largest array of ECRAM devices for storing and processing analog signals reported in the literature to date. By implementing these devices at a larger scale and demonstrating their effectiveness in AI neural network training, the research team has paved the way for commercializing the technology. The team also reports that the “weight retention” property of the hardware training technique helps enhance learning without overloading the artificial neural network.

Analog hardware built on ECRAM devices shows promise for maximizing the computational performance of artificial intelligence. By addressing limitations of traditional digital hardware and demonstrating a path toward commercialization, this research marks a significant advance in AI hardware development.
