Quantum computers have shown promise for revolutionizing information processing, especially in fields such as machine learning and optimization. However, their large-scale deployment is hindered by their sensitivity to noise, which introduces errors into computations. One proposed technique for tackling these errors is quantum error correction, which detects and corrects errors in real time while the computation is running. Another approach, quantum error mitigation, lets error-prone computations run to completion and then uses post-processing to infer what the correct, noise-free result would have been. Despite initial optimism about the effectiveness of quantum error mitigation, recent research has shed light on its limitations.

Researchers from institutions including the Massachusetts Institute of Technology and the École Normale Supérieure de Lyon conducted a study showing that quantum error mitigation becomes inefficient as quantum computers scale up. They found that although error mitigation requires fewer resources and less precise engineering than error correction, it grows increasingly inefficient as quantum circuits get larger. One example cited in the study is zero-noise extrapolation, a scheme that deliberately amplifies the noise in the system, runs the circuit at several noise levels, and extrapolates the results back to the zero-noise limit, an approach that runs into scalability issues.
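To make the idea behind zero-noise extrapolation concrete, here is a minimal Python sketch under a toy assumption: the measured expectation value decays exponentially as the noise is scaled up by a factor `scale`, with `scale = 1` standing for the hardware's native noise level. The functions `noisy_expectation` and `zero_noise_extrapolate`, and the decay rate `gamma`, are hypothetical illustrations rather than any library's API. Real implementations amplify noise physically (for example by gate folding) and must cope with shot noise, which this sketch ignores.

```python
import numpy as np


def noisy_expectation(scale: float, ideal: float = 1.0, gamma: float = 0.1) -> float:
    """Hypothetical toy model: the expectation value decays exponentially
    as the noise is amplified by `scale` (scale=1 is the native noise level)."""
    return ideal * np.exp(-gamma * scale)


def zero_noise_extrapolate(scales, values, degree: int) -> float:
    """Fit a polynomial to (noise scale, measured value) pairs and
    evaluate it at scale=0, i.e. at the hypothetical zero-noise limit."""
    coeffs = np.polyfit(scales, values, degree)
    return float(np.polyval(coeffs, 0.0))


# Amplified noise levels at which the (toy) circuit is "run".
scales = np.array([1.0, 2.0, 3.0])
values = np.array([noisy_expectation(s) for s in scales])

raw = values[0]  # what an unmitigated run at native noise would report
mitigated = zero_noise_extrapolate(scales, values, degree=2)
print(f"raw estimate (scale=1): {raw:.4f}")
print(f"zero-noise estimate:    {mitigated:.4f}  (ideal value 1.0)")
```

With three noise levels and a quadratic fit, the extrapolation effectively combines the three measurements with weights 3, -3 and 1. Those large, alternating weights amplify any statistical error in the measured values, which is part of the overhead such schemes pay for removing noise.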

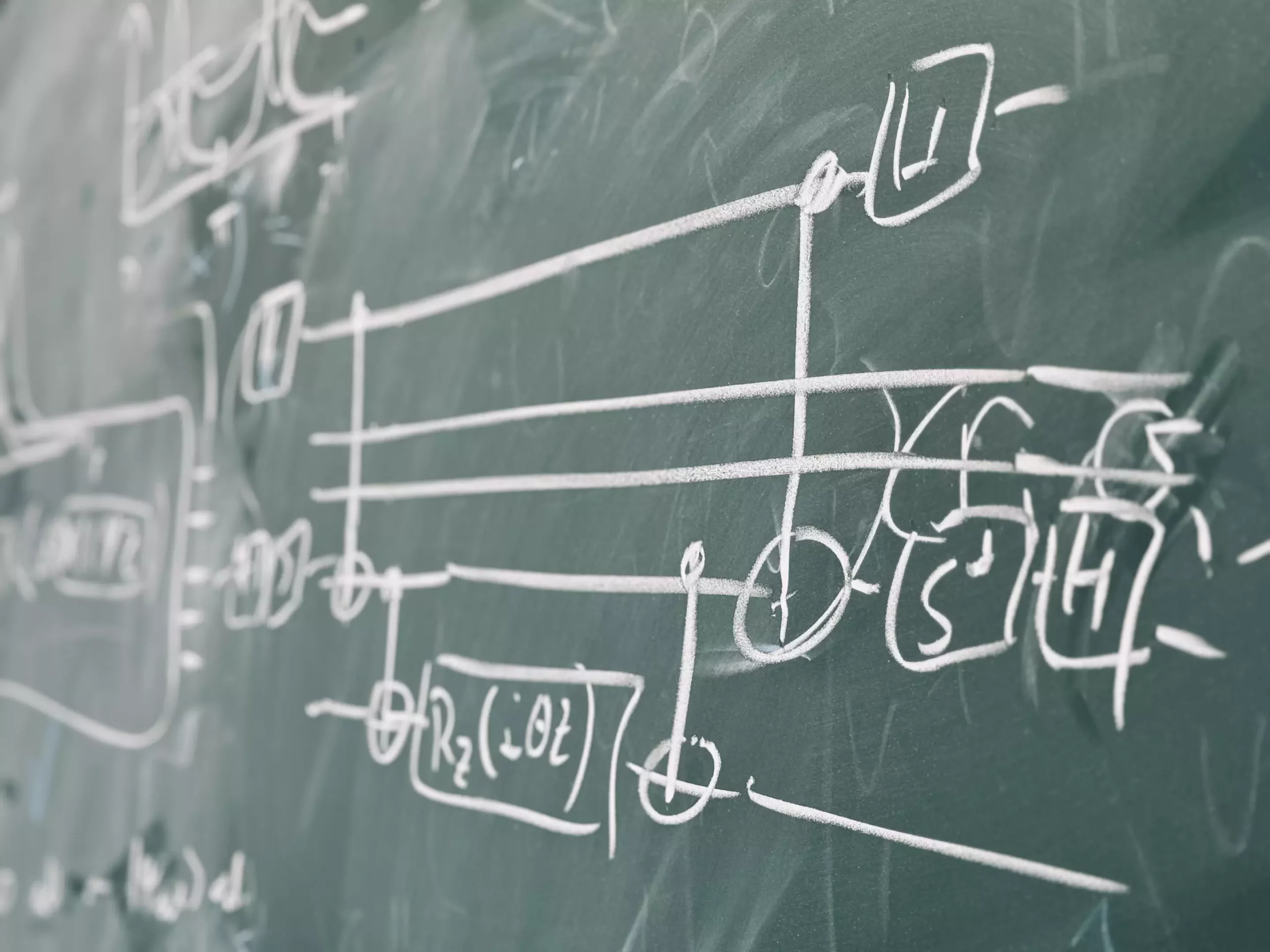

Quantum computations are expressed as circuits: layers of quantum gates applied in sequence on a quantum processor. Because the gates are noisy, each layer introduces errors, which threatens the accuracy of the final result. The study showed that as circuits grow deeper to perform more complex computations, errors accumulate quickly enough to render error mitigation ineffective. In effect, noise builds up faster than the circuit can compute, and this mismatch poses a significant obstacle to the practical use of quantum error mitigation.
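A simple way to see how errors pile up layer by layer is a white-noise toy model. This is an assumption for illustration, not the study's exact noise model: each layer applies one gate per qubit, each gate fails with probability `p_gate`, and any single failure fully scrambles the result. Under that model, the useful signal that survives shrinks exponentially with the total number of gates. The function name `signal_fraction` and the parameter values are hypothetical.

```python
def signal_fraction(p_gate: float, n_qubits: int, depth: int) -> float:
    """Toy white-noise model (assumption): every layer applies one gate per
    qubit, and any gate error completely scrambles the output, so the
    noiseless component survives with probability (1 - p_gate) ** n_gates."""
    n_gates = n_qubits * depth
    return (1.0 - p_gate) ** n_gates


# Fraction of the ideal signal left after circuits of increasing depth,
# for 50 qubits and a 0.1% error rate per gate.
for depth in (10, 50, 100, 200):
    f = signal_fraction(p_gate=0.001, n_qubits=50, depth=depth)
    print(f"depth {depth:4d}: surviving signal {f:.3e}")
```

Doubling the depth squares the surviving fraction, so even a small per-gate error rate wipes out almost all of the signal in a deep, wide circuit.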

As quantum circuits scale up, the effort and resources required for error mitigation increase exponentially. The research team’s findings suggest that quantum error mitigation is not as scalable as previously anticipated, raising concerns about its long-term viability. The study serves as a cautionary tale for quantum physicists and engineers, prompting them to explore alternative approaches to mitigating quantum errors effectively. The inefficiency inherent in quantum error mitigation calls for a reevaluation of current strategies and the development of more coherent schemes.
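The exponential cost can be estimated on the back of an envelope. Assuming, as in a simple white-noise model, that noise damps the ideal expectation value by a factor `f = (1 - p_gate) ** (n_qubits * depth)`, recovering the ideal value means dividing by `f`, which also magnifies statistical error by `1/f`. Reaching a target precision `epsilon` then takes on the order of `1 / (epsilon * f) ** 2` repeated runs. The helper `shots_needed` below is a hypothetical illustration of this scaling, not a formula taken from the study.

```python
import math


def shots_needed(epsilon: float, p_gate: float, n_qubits: int, depth: int) -> int:
    """Back-of-the-envelope estimate (assumption): if noise damps the ideal
    expectation value by a factor f, undoing the damping rescales the
    statistical error by 1/f, so reaching precision epsilon takes about
    1 / (epsilon * f) ** 2 shots for +/-1-valued measurements (variance <= 1)."""
    f = (1.0 - p_gate) ** (n_qubits * depth)  # same toy white-noise model
    return math.ceil(1.0 / (epsilon * f) ** 2)


# Shots needed for precision 0.01 with 50 qubits and a 0.1% gate error rate.
for depth in (10, 50, 100):
    n = shots_needed(epsilon=0.01, p_gate=0.001, n_qubits=50, depth=depth)
    print(f"depth {depth:3d}: about {n:,} shots")
```

Because `f` shrinks exponentially with both depth and width, the required number of runs grows exponentially: in this toy model, going from depth 10 to depth 100 with 50 qubits multiplies the shot count by roughly 8,000.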

While the research highlights the limitations of quantum error mitigation, it also points to directions for further work. By pinpointing where current mitigation schemes break down, researchers can focus on developing more efficient and scalable solutions. The study also underscores the importance of understanding how noise propagates through quantum circuits and affects the computation, insights that can guide future advances in quantum computing technology.

The research on the inefficiency of quantum error mitigation sheds light on the challenges that quantum computers face in addressing noise-induced errors. As quantum technology continues to evolve, the development of robust error mitigation strategies will be crucial for unlocking the full potential of quantum computing. By acknowledging the limitations of current approaches and exploring new avenues for improvement, researchers can overcome the obstacles posed by noise and enable the realization of practical quantum computing applications.
