Quantum computing holds immense potential for transforming industries from climate modeling to drug discovery. However, one major hurdle to harnessing this power is the inherent variability of quantum devices, which limits how predictably they behave and how well they scale. Researchers from the University of Oxford have recently tackled this challenge with a physics-informed machine learning approach. By combining mathematical and statistical methods with deep learning, they have narrowed the "reality gap" between the predicted and observed behavior of quantum devices.

Quantum devices, such as the quantum dots used to host qubits, are fundamental building blocks of quantum computers. Even devices that appear identical behave differently, because of nanoscale imperfections in the materials from which they are made. This variability makes it difficult to scale up and combine individual qubits effectively. Since there is no direct way to measure these nanoscale imperfections, existing simulations fail to capture the internal disorder, leading to discrepancies between predicted and observed outcomes.

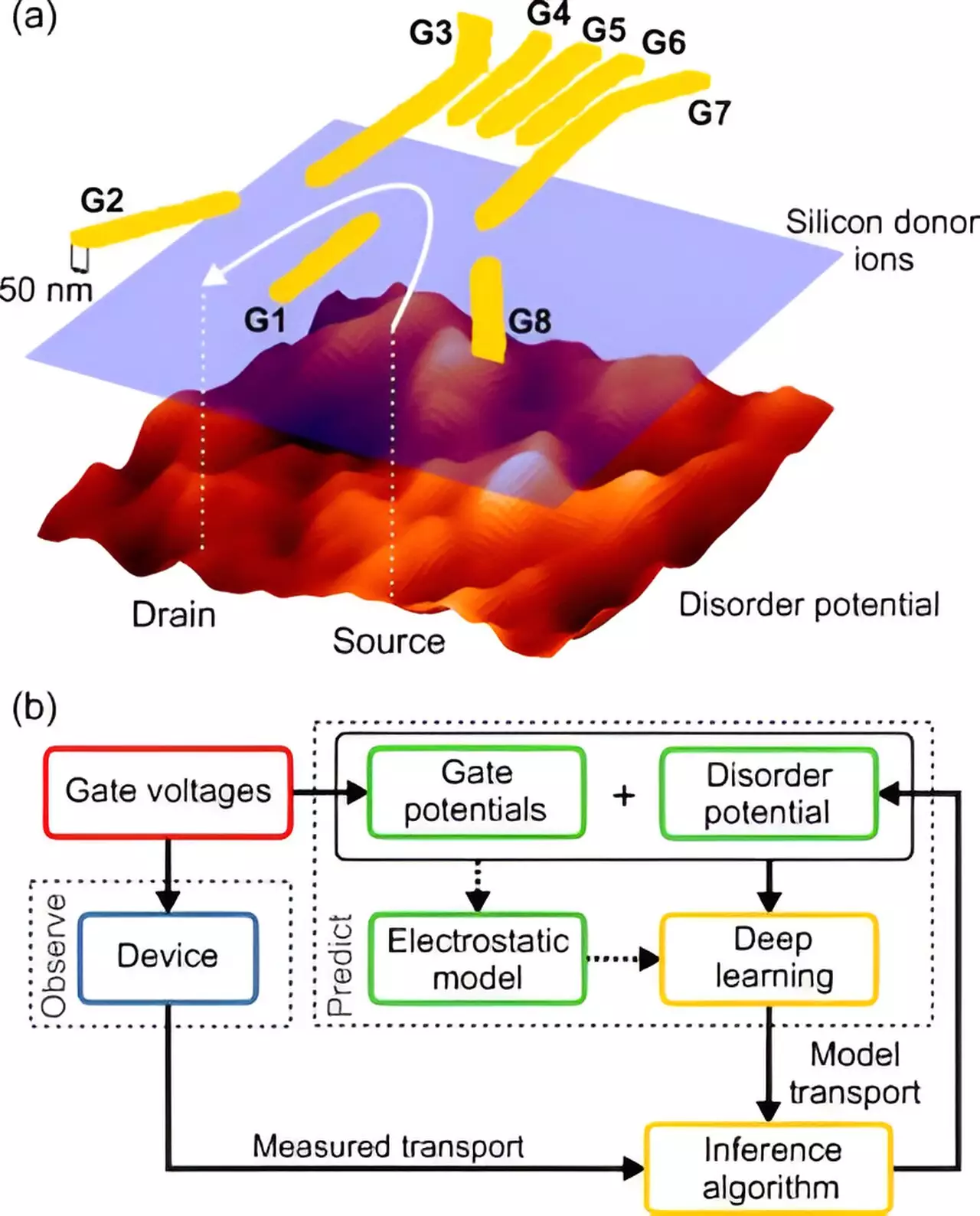

To address this challenge, the research group at the University of Oxford adopted a "physics-informed" machine learning approach. Rather than measuring the disorder directly, they inferred its characteristics indirectly by studying how it affected the flow of electrons through the device. The researchers measured the output current across an individual quantum dot device at different voltage settings and fed the data into a simulation, which calculated the difference between the measured current and the current expected in the absence of internal disorder.

The combination of mathematical and statistical approaches with deep learning allowed the researchers to find an arrangement of internal disorder that could explain the measured current at all voltage settings. This approach yielded promising results, narrowing the reality gap in quantum devices. Lead researcher Associate Professor Natalia Ares drew an analogy to playing “crazy golf,” where predictions of a ball’s movements may differ from its actual behavior. By collecting measurements and using machine learning algorithms, one can improve predictions and bridge the reality gap.
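The core idea, searching for a disorder configuration whose simulated current matches the measurements at every voltage setting, can be illustrated with a deliberately simplified toy. This is a minimal sketch, not the Oxford group's method: it assumes a hypothetical disorder-free model in which the current is a single Lorentzian peak, represents "disorder" as one number that shifts that peak, and replaces the researchers' statistical and deep-learning machinery with a brute-force grid search. All function names and parameter values are illustrative assumptions.

```python
import math  # kept for readers who extend the toy model with other peak shapes


def ideal_current(v, peak_v=1.0, width=0.05, amplitude=1.0):
    """Hypothetical disorder-free model: one Lorentzian current peak at peak_v."""
    return amplitude / (1.0 + ((v - peak_v) / width) ** 2)


def simulated_current(v, disorder_shift):
    """Toy assumption: disorder acts as a constant offset that shifts the peak."""
    return ideal_current(v - disorder_shift)


def mismatch(disorder_shift, voltages, measured):
    """Sum of squared differences between measured and simulated current,
    taken over all voltage settings at once."""
    return sum(
        (m - simulated_current(v, disorder_shift)) ** 2
        for v, m in zip(voltages, measured)
    )


def infer_disorder(voltages, measured, candidates):
    """Grid search (a stand-in for the real optimisation): return the candidate
    disorder value that best explains the measurements at every voltage."""
    return min(candidates, key=lambda d: mismatch(d, voltages, measured))


# Synthetic "measurements" from a device whose (hidden) disorder shift is 0.03.
voltages = [0.8 + 0.005 * i for i in range(81)]
measured = [simulated_current(v, 0.03) for v in voltages]

# mismatch(0.0, ...) is the gap between measurement and the disorder-free model.
gap_without_disorder = mismatch(0.0, voltages, measured)

candidates = [-0.1 + 0.005 * i for i in range(41)]
best = infer_disorder(voltages, measured, candidates)
print(round(best, 3))  # prints 0.03
```

Because the mismatch is summed over every voltage setting, a candidate only scores well if it explains the whole measured curve, which mirrors the requirement described above that the inferred disorder account for the current "at all voltage settings."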

The new model not only found internal disorder profiles that reproduced the measured current values but also accurately predicted the voltage settings required for specific device operating regimes. This provides a valuable method for quantifying the variability between quantum devices, enabling more accurate predictions of their performance. The model may also offer insights into engineering better materials for quantum devices and mitigating the unwanted effects of material imperfections.

Managing variability in quantum devices matters for the advancement of quantum computing as a whole. With more predictable and scalable hardware, quantum computers move closer to fulfilling their potential in a wide range of applications, from climate modeling to financial forecasting and artificial intelligence, where they could tackle problems that are intractable for classical machines. Machine learning is one of the tools helping researchers get there.

The University of Oxford's work on using machine learning to account for variability in quantum devices marks a significant milestone in the field. By narrowing the reality gap, the researchers have taken an important step towards making quantum devices more predictable and scalable. With continued advances in machine learning and quantum technology, that progress could open up new possibilities across many industries.
