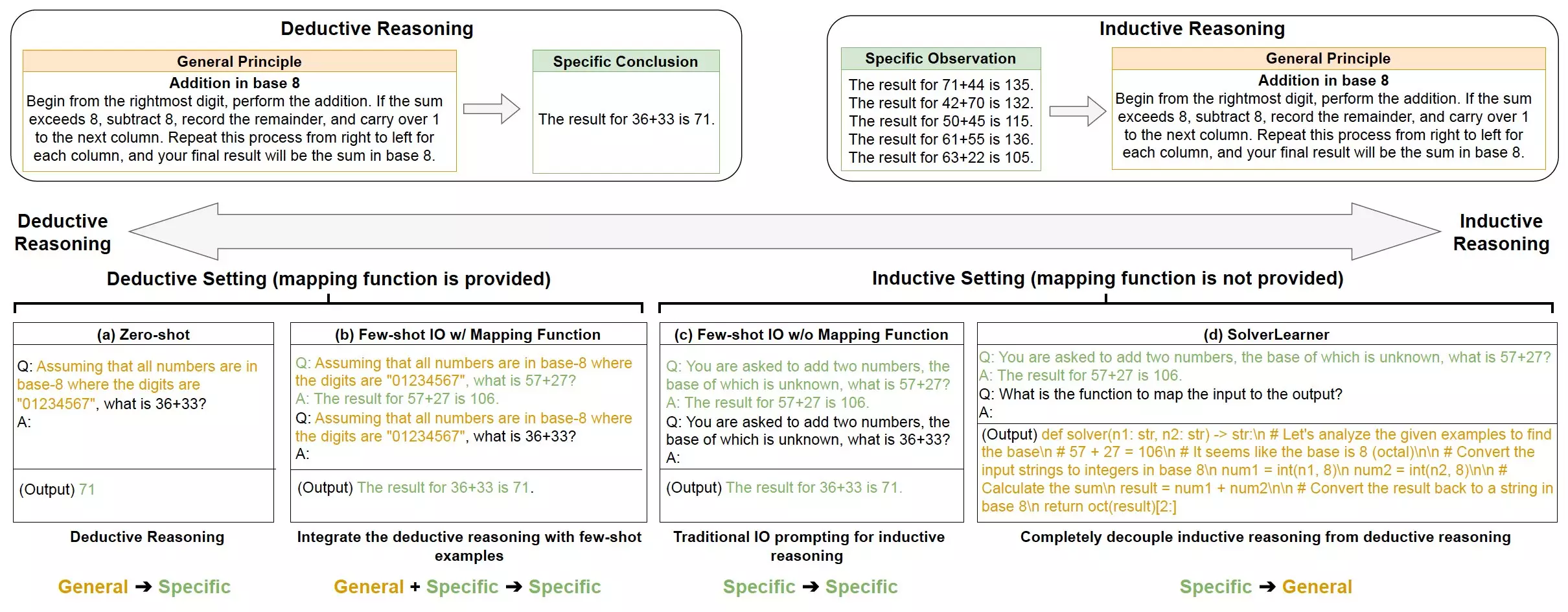

Humans draw conclusions and solve problems using two main kinds of reasoning: deductive and inductive. Deductive reasoning starts from a general rule or premise and applies it to reach conclusions about specific cases. For example, if we know that all dogs have ears and that Chihuahuas are dogs, we can deduce that Chihuahuas have ears.
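As a rough illustration (not taken from the study), deduction can be sketched in Python as applying stored general rules to a specific case. The `RULES` and `IS_A` tables and the `deduce` function are hypothetical names chosen for this example.

```python
# Hypothetical knowledge base for illustration only.
# Each general rule (category, property) reads "all members of <category> have <property>".
RULES = {("dog", "ears")}
# Specific facts: which category each individual case belongs to.
IS_A = {"chihuahua": "dog"}

def deduce(case: str, prop: str) -> bool:
    """Apply the general rules to one specific case.

    Returns True only when a rule about the case's category guarantees the
    property. False means the rules support no such conclusion, not that the
    property has been disproved."""
    category = IS_A.get(case)
    return category is not None and (category, prop) in RULES

print(deduce("chihuahua", "ears"))   # True: all dogs have ears, and a Chihuahua is a dog
print(deduce("chihuahua", "wings"))  # False: no rule says dogs have wings
```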

Exploring Inductive Reasoning

Inductive reasoning works in the opposite direction: it forms general rules from specific observations. For instance, someone who has only ever seen white swans might conclude that all swans are white.
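Induction can be sketched the other way around: start from specific sightings and propose a general rule. The `induce_color_rules` function below is a hypothetical, deliberately naive illustration. Note that a single contradicting observation, such as a black swan, is enough to withdraw the rule, which is why inductive conclusions are never guaranteed to hold.

```python
from typing import Dict, Iterable, Set, Tuple

def induce_color_rules(observations: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Generalize from specific (species, color) sightings.

    If every observed member of a species had the same color, propose the
    general rule "all <species> are <color>". Species seen in more than one
    color get no rule."""
    colors: Dict[str, Set[str]] = {}
    for species, color in observations:
        colors.setdefault(species, set()).add(color)
    return {s: next(iter(c)) for s, c in colors.items() if len(c) == 1}

sightings = [("swan", "white")] * 3
print(induce_color_rules(sightings))                        # {'swan': 'white'}
print(induce_color_rules(sightings + [("swan", "black")]))  # {}
```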

The Study on Large Language Models

A recent study by a research team at Amazon and the University of California, Los Angeles (UCLA) examined the reasoning abilities of large language models (LLMs). LLMs are AI systems that can process, generate, and adapt text in human languages. The study set out to determine how well LLMs handle each of the two reasoning strategies, deductive and inductive.

The findings indicate that LLMs have strong inductive reasoning capabilities but often lack deductive reasoning skills. Specifically, LLMs struggle to reason deductively in "counterfactual" scenarios, in which the usual rules of a task are changed. This suggests that LLMs may be better suited to tasks that call for inductive reasoning.

To better understand how LLMs reason, the researchers introduced a new framework called SolverLearner. It separates learning a rule (induction) from applying that rule to specific cases (deduction). The LLM only has to infer the rule from examples; external tools then apply it. This reduces reliance on the LLM's deductive reasoning abilities and makes it possible to assess its inductive reasoning on its own.
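The split between learning a rule and applying it can be sketched as follows. This is a toy illustration under stated assumptions, not the researchers' implementation: the LLM's induction step is replaced by a hypothetical brute-force search over linear rules `y = a*x + b`, and the learned rule is written out as Python source that the interpreter executes, standing in for the external tool. All function names here are hypothetical.

```python
from itertools import product
from typing import Callable, List, Optional, Tuple

Example = Tuple[int, int]  # (input x, expected output y)

def induce_rule_source(train: List[Example]) -> Optional[str]:
    """Induction step (hypothetical stand-in for the LLM).

    Searches linear rules y = a*x + b with small integer coefficients and
    returns Python source for the first rule consistent with every training
    example, or None if no candidate fits."""
    for a, b in product(range(-5, 6), repeat=2):
        if all(a * x + b == y for x, y in train):
            return f"def f(x):\n    return {a} * x + {b}\n"
    return None

def execute_rule(source: str) -> Callable[[int], int]:
    """Application step: the Python interpreter, not the rule learner, applies the rule."""
    namespace: dict = {}
    exec(source, namespace)
    return namespace["f"]

def evaluate(train: List[Example], test: List[Example]) -> float:
    """Fraction of held-out test cases the induced rule gets right."""
    source = induce_rule_source(train)
    if source is None:
        return 0.0
    rule = execute_rule(source)
    return sum(rule(x) == y for x, y in test) / len(test)

train = [(1, 3), (2, 5), (4, 9)]  # consistent with y = 2x + 1
test = [(0, 1), (10, 21)]
print(induce_rule_source(train))
print(evaluate(train, test))      # 1.0
```

Because `execute_rule` applies the proposed rule exactly, any error on the test cases can be attributed to the induction step rather than to a mistake in applying the rule.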

The study's results have implications for AI developers who want to play to the strengths of LLMs in specific tasks. Their strong inductive reasoning can be valuable in scenarios that require generalizing from past examples to make predictions. A clearer picture of how LLMs reason could, in turn, help developers build more efficient AI systems.

Future research in this area could focus on exploring how the ability of LLMs to compress information relates to their inductive reasoning capabilities. By gaining insights into how LLMs process and reason through data, developers can further enhance the performance of these AI systems. This research could also pave the way for advancements in AI technology in various fields.
