A recent study by researchers from the University of Portsmouth and the Max Planck Institute for Innovation and Competition found that a majority of participants preferred artificial intelligence (AI) over human decision-makers for redistributive decisions. This preference challenges the traditional belief that humans are better suited to decisions with a moral component, such as fairness. The study, published in the journal Public Choice, points to growing acceptance of AI in decision-making.

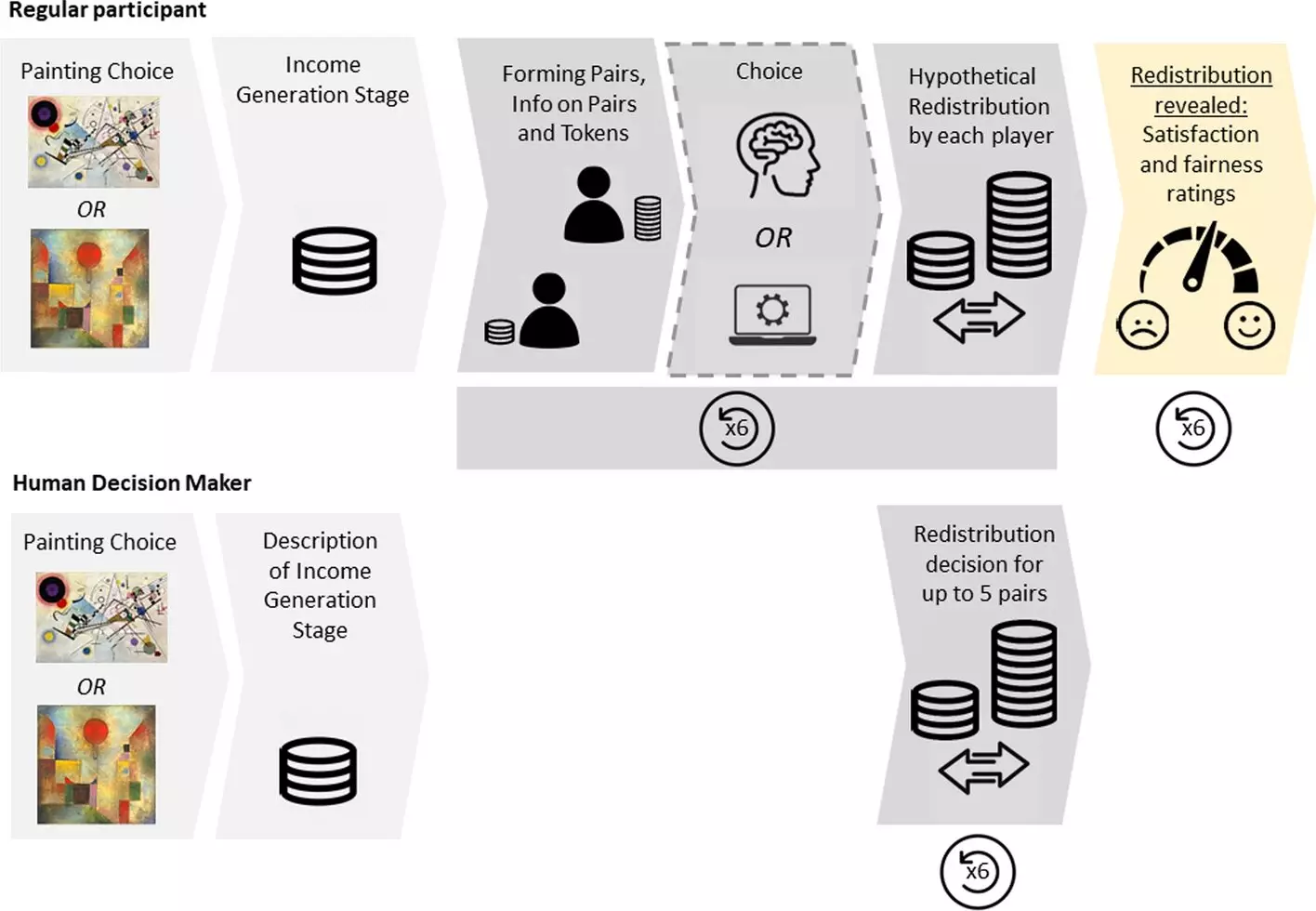

The study used an online decision experiment in which participants from the U.K. and Germany chose between a human and an algorithm to determine how earnings would be redistributed. More than 60% of participants chose the algorithm, regardless of the potential for discrimination. This preference suggests growing trust in the ability of AI to make unbiased decisions, especially in scenarios involving financial redistribution.

Dr. Wolfgang Luhan, Associate Professor of Behavioral Economics at the University of Portsmouth, emphasized the significance of transparency and accountability in AI decision-making. Although participants were willing to choose algorithmic decision-makers, they were less satisfied with the algorithm's decisions and perceived them as less fair than decisions made by humans. This discrepancy highlights the importance of understanding how algorithms operate and the need for clear explanations of the decision-making process.

Challenges and Opportunities

As AI continues to be integrated into various sectors, including hiring, compensation planning, policing, and parole strategies, the study suggests that improvements in algorithm consistency could lead to greater public support for AI decision-makers. The potential for unbiased decisions and the ability to explain the decision-making process are crucial factors in increasing acceptance of AI in morally significant areas.

The findings point to a shift in how AI is perceived in decision-making. Most participants favored algorithms over humans for redistributive decisions, yet the lower satisfaction with algorithmic outcomes shows that preference alone does not guarantee acceptance. As the technology evolves, understanding the implications of relying on AI for critical decisions will be essential to building trust in algorithmic decision-makers.
